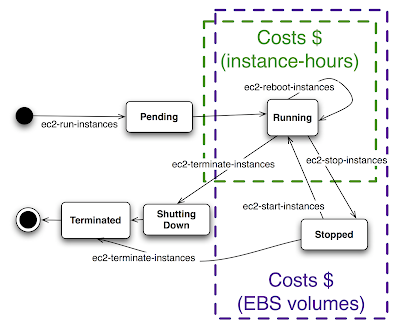

There are many posts about how to mount an EBS volume to your EC2 instance during the startup process. But requiring an instance to use a specific EBS volume has limitations that make the technique unsuitable for large-scale use. In this article I present a more flexible technique that uses an EBS snapshot instead.

Update December 2009: Amazon released native support for automatically mounting EBS volumes created from a snapshot, as part of supporting the boot-from-EBS feature. The techniques in this article are no longer necessary. But they’re cool anyway.

Limitations of Mounting an EBS Volume on Instance Startup

Mounting an EBS volume at startup is relatively straightforward (see the above-referenced posts for details). The main features of the procedure are:

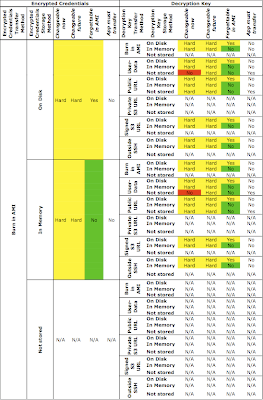

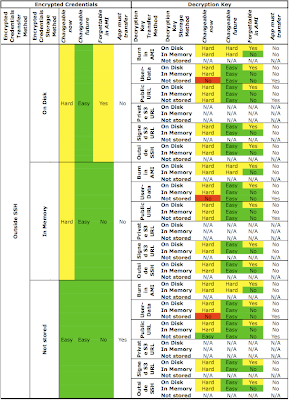

- The instance uses the EC2 API tools to attach the specified EBS volume. These tools, in turn, require Java and your EC2 credentials – your certificate and private key.

- Ideally, the AMI contains a hook to allow the EBS volume ID to be specified dynamically at startup time, either as a parameter in the user-data or retrieved from S3 or SimpleDB.

- The AMI should already contain (or its startup scripts should create) the appropriate references to the necessary locations on the mounted EBS volume. For example, if the volume is mounted at /vol, then /var/lib/mysql (the default MySQL data directory) might be soft-linked (ln -s) or bind-mounted (mount --bind) to /vol/var/lib/mysql. Alternatively, the applications can be configured to use the locations on the mounted volume directly.
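As a rough illustration of the soft-link approach, the sketch below moves a directory onto the mounted volume and leaves a symlink at the original path. The function name relocate_with_symlink is hypothetical and is not taken from any of the referenced posts; the bind-mount alternative is shown only as a comment because it requires root.

```shell
# Hypothetical helper: move a directory onto the mounted volume and
# leave a symlink behind at the original path.
relocate_with_symlink() {
    src="$1"         # original location, e.g. /var/lib/mysql
    vol="$2"         # volume mount point, e.g. /vol
    dest="$vol$src"  # resulting location, e.g. /vol/var/lib/mysql

    mkdir -p "$(dirname "$dest")"
    mv "$src" "$dest"
    ln -s "$dest" "$src"
}

# Bind-mount alternative (needs root): keep an empty directory at the
# original path and run:
#   mount --bind /vol/var/lib/mysql /var/lib/mysql
```

The helper assumes the destination does not exist yet, so it suits first-time setup rather than repeated runs.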

There are many benefits to mounting an EBS volume at instance startup:

- Avoid the need to burn a new AMI when the content on the instance’s disks changes.

- Gain the redundancy provided by an EBS volume.

- Gain the point-in-time backups provided by EBS snapshots.

- Avoid the need to store the updated content into S3 before instance shutdown.

- Avoid the need to copy and reconstitute the content from S3 to the instance during startup.

- Avoid paying for an instance to be always-on.

But mounting an EBS volume at startup also has important limitations:

- Instances must be launched in the same availability zone as the EBS volume. EBS volumes are availability-zone specific, and are only usable by instances running in the same availability zone. Large-scale deployments use instances in multiple availability zones to mitigate risk, so limiting the deployment to a single availability zone is not reasonable.

- There is no way to create multiple instances that each have the same EBS volume attached. When you need multiple instances that each have the same data, one EBS volume will not do the trick.

- As a corollary to the previous point, it is difficult to create Auto Scaling groups of AMIs that mount an EBS volume automatically because each instance needs its own EBS volume.

- It is difficult to automate the startup of a replacement instance when the original instance still has the EBS volume attached. Large-scale deployments need to be able to handle failure automatically because instances will fail. Sometimes instances will fail in a not-nice way, leaving the EBS volume attached. Detaching the EBS volume may require manual intervention, which is something that should be avoided if at all possible for large-scale deployments.

These limitations make the technique of mounting an EBS volume at startup unsuitable for large-scale deployments.

The Alternative: Mount an EBS Volume Created from a Snapshot at Startup

Instead of specifying an EBS volume to mount at startup, we can specify an EBS snapshot. At startup the instance creates a new EBS volume from the given snapshot and attaches the new volume to itself. The basic startup flow looks like this:

- If there is a volume already attached at the target location, do nothing – this is a reboot. Otherwise, continue to the next step.

- Create a new EBS volume from the specified snapshot. This requires the following:

  - Java

  - The EC2 API tools

  - The EC2 account’s certificate and private key

  - The EBS snapshot ID

- Attach the newly-created EBS volume and mount it to the mount point.

- Restore any filesystem pointers, if necessary, to point to the proper locations beneath the EBS volume’s mount point.

Like the technique of mounting an EBS volume, this technique should ideally support specifying the snapshot ID dynamically at instance startup time, perhaps via the user-data or retrieved from S3 or SimpleDB.
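The startup flow above might be sketched roughly as follows. This is not the actual startup script used later in this article, only a minimal outline under stated assumptions: the settings are hard-coded, the EC2 API tools and credentials are already configured, the filesystem is XFS, and ec2-create-volume prints a tab-separated VOLUME line with the volume ID in the second field. The names METADATA_URL, parse_volume_id, and create_and_mount are made up for this sketch.

```shell
# Assumed settings; a real script would read these from its configuration.
EBS_VOL_FROM_SNAPSHOT_ID="snap-00000000"
EBS_ATTACH_DEVICE="/dev/sdh"
EBS_MOUNT_DIR="/vol"
EBS_EXPORTS="/etc/mysql /var/lib/mysql /var/log/mysql"
METADATA_URL="http://169.254.169.254/latest/meta-data"

# Extract the volume ID from ec2-create-volume output (assumed format:
# VOLUME <tab> vol-xxxxxxxx <tab> ...).
parse_volume_id() {
    awk '$1 == "VOLUME" { print $2; exit }'
}

create_and_mount() {
    # Step 1: a device already at the attach point means this is a reboot.
    # (Whether a remount is needed after reboot is not covered here.)
    if [ -b "$EBS_ATTACH_DEVICE" ]; then
        return 0
    fi

    # Step 2: create the volume in this instance's own availability zone.
    instance_id=$(curl -s "$METADATA_URL/instance-id")
    zone=$(curl -s "$METADATA_URL/placement/availability-zone")
    vol_id=$(ec2-create-volume --snapshot "$EBS_VOL_FROM_SNAPSHOT_ID" \
        -z "$zone" | parse_volume_id)
    [ -n "$vol_id" ] || return 1
    until ec2-describe-volumes "$vol_id" | grep -q available; do
        sleep 5
    done

    # Step 3: attach, wait for the device node to appear, then mount.
    ec2-attach-volume "$vol_id" -i "$instance_id" -d "$EBS_ATTACH_DEVICE"
    until [ -b "$EBS_ATTACH_DEVICE" ]; do
        sleep 1
    done
    mkdir -p "$EBS_MOUNT_DIR"
    mount -t xfs -o noatime "$EBS_ATTACH_DEVICE" "$EBS_MOUNT_DIR"

    # Step 4: restore the filesystem pointers with bind mounts.
    for dir in $EBS_EXPORTS; do
        mkdir -p "$dir"
        mount --bind "$EBS_MOUNT_DIR$dir" "$dir"
    done
}
```

The block only defines functions; nothing touches EC2 until create_and_mount is called as root on an instance.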

Why Mount an EBS Volume Created from a Snapshot at Startup?

As outlined above, the procedure is simple and it offers the following benefits:

- Instances need not be launched in the same availability zone as the EBS volume. However, instances are limited to using EBS snapshots that are in the same region (US or EU).

- Instances no longer need to rely on a specific EBS volume being available.

- Multiple instances can be launched easily, each of which will automatically configure itself with its own EBS volume made from the snapshot.

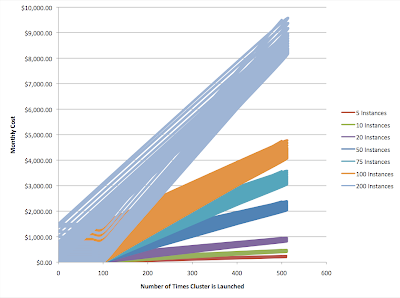

- Costs can be reduced by allowing “duplicate” EBS volumes to be provisioned only when they are needed by an instance. “Duplicate” EBS volumes are created on demand, and can also (optionally) be deleted during instance termination. Previously, you needed to keep around as many EBS volumes as the maximum number of simultaneous instances you would use.

- Large-scale deployments requiring content on an EBS volume are easy to build.

Here are some cool things that are made possible by this technique:

- MySQL replication slave (or cluster member) launching can be made more efficient. By specifying a recent snapshot of the master database’s EBS volume, the new MySQL slave instance will begin its life already containing most of the data. This slave will demand fewer resources from the master instance and will take less time to catch-up to the master. If you do plan to use this technique for launching MySQL slaves, see Eric Hammond’s article on EBS snapshots of a MySQL slave database in EC2 for some sage words of advice.

- Auto Scaling can launch instances that mount content stored in EBS at startup. If the auto-scaled instances all need to access common content that is stored in EBS, this technique allows you to duplicate that content onto each auto-scaled instance automatically. And, if the instance gets the snapshot ID from its user-data at startup, you can easily change the snapshot ID for auto-scaled instances by updating the launch configuration.

I am currently exploring how to combine this technique with the one discussed in my article about how to boot the entire instance from an EBS volume. Combining these approaches could provide the ability to “boot from a snapshot”, allowing you to relate to bootable snapshots the same way you think about AMIs. Stay tuned to this blog for an article on this approach.

Caveats

Sounds great, huh? Despite these benefits, this technique can introduce a new problem: too many EBS volumes. As you may know, AWS limits the number of EBS volumes you can create to 20 (initially, and you can request a higher limit). This technique creates a new EBS volume each time an instance starts up, so your account will accumulate many EBS volumes. Plus, each volume will be almost indistinguishable from the others, making them difficult to track.

One potential way to distinguish the EBS volumes would be the ability to tag them via the API: Each instance would tag the volume upon creation, and these tags would be visible in the management interface to provide information about the origin of the volume. Unfortunately the EC2 API does not offer a way to tag EBS volumes. Until that feature is supported, use the ElasticFox Firefox extension to tag EBS volumes manually. I find it helpful to tag volumes with the creating instance’s ID and the instance’s “tag” security groups (see my article on using security groups to tag instances). ElasticFox displays the snapshot ID from which the volume was created and its creation timestamp, which are also useful to know.

As already hinted at, you will still need to think about what to do when the newly-created EBS volumes are no longer in use by the instance that created them. If you know you won’t need them, have a script to detach and delete the volume during instance shutdown (but not shutdown-before-reboot). Be aware that if an instance fails to terminate nicely the attached EBS volume may still exist and you will be charged for it.

In any case, make sure you keep track of your EBS volumes because the cost of keeping them around can add up quickly.
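One way to keep an eye on leftover volumes is to filter the ec2-describe-volumes output for unattached volumes that came from a given snapshot. The sketch below assumes the tools print tab-separated VOLUME lines in the order volume ID, size, snapshot ID, zone, status, creation time; check this against the output of your version of the tools. The function name orphaned_volumes_from_snapshot is hypothetical.

```shell
# Print the IDs of unattached ("available") volumes created from the
# given snapshot. Reads ec2-describe-volumes output on stdin.
# Assumed layout of VOLUME lines (tab-separated):
#   VOLUME  vol-id  size  snapshot-id  zone  status  create-time
orphaned_volumes_from_snapshot() {
    awk -F '\t' -v snap="$1" \
        '$1 == "VOLUME" && $4 == snap && $6 == "available" { print $2 }'
}

# Usage:
#   ec2-describe-volumes | orphaned_volumes_from_snapshot snap-00000000
```

Volumes listed this way are candidates for review, not necessarily safe to delete: an "available" volume may simply be waiting to be attached.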

How to Mount an EBS Volume Created from a Snapshot on Startup

Now for the detailed instructions. Please note that the instructions below have been tested on Ubuntu 8.04, and should work on Debian or Ubuntu systems. If you are running a Red Hat-based system such as CentOS then some of the procedure may need to be adjusted accordingly.

There are four parts of getting set up:

- Setting up the Original Instance with an EBS volume

- Creating the EBS Snapshot

- Preparing the AMI

- Launching a New Instance

In the last step the new instance will create a new volume from the specified snapshot and mount it during startup.

Setting Up the Original Instance with an EBS volume

[Note: this section is based on the fine article about running MySQL with EBS by Eric Hammond.]

Start out with an EC2 instance booted from an AMI that you like. I recommend one of the Alestic Ubuntu Hardy 8.04 Server AMIs. The instance will be assigned an instance ID (in this example i-11111111) and a public IP address (in this example 1.1.1.1).

ec2-run-instances -z us-east-1a --key MyKeypair ami-0772946e

ec2-describe-instances i-11111111

Once the ec2-describe-instances output shows that the instance is running, continue by creating an EBS volume. This command creates a 1 GB volume in the us-east-1a availability zone, which is the same zone in which the instance was launched. The volume will be assigned a volume ID (in this example vol-00000000).

ec2-create-volume -z us-east-1a -s 1

ec2-describe-volumes vol-00000000

Once the ec2-describe-volumes output shows that the volume is available, attach it to the instance:

ec2-attach-volume -d /dev/sdh -i i-11111111 vol-00000000

Next we can log into the instance and set it up. The following will install MySQL and the XFS filesystem drivers, and then format and mount the EBS volume. When prompted, specify a MySQL root password. If you are running a Canonical Ubuntu AMI you need to change the ssh username from root to ubuntu in these commands.

ssh -i id_rsa-MyKeypair root@1.1.1.1

sudo apt-get update && sudo apt-get upgrade -y

sudo apt-get install -y xfsprogs mysql-server

sudo modprobe xfs

sudo mkfs.xfs /dev/sdh

sudo mkdir /vol

sudo mount -t xfs -o noatime /dev/sdh /vol

The EBS volume is now attached and formatted and MySQL is installed, so now we configure MySQL to use the EBS volume for its data, configuration, and logs:

sudo /etc/init.d/mysql stop

# Due to a minor MySQL bug this may be necessary - does not hurt

sudo killall mysqld_safe

export EBS_MOUNT_DIR=/vol

export EBS_EXPORTS="/etc/mysql /var/lib/mysql /var/log/mysql"

for i in $EBS_EXPORTS

do

EBS_MOUNTED_EXPORT_DIR="$EBS_MOUNT_DIR""$i"

sudo mkdir -p `dirname "$EBS_MOUNTED_EXPORT_DIR"`

sudo mv $i `dirname "$EBS_MOUNTED_EXPORT_DIR"`

sudo mkdir $i

sudo mount --bind "$EBS_MOUNTED_EXPORT_DIR" "$i"

done

sudo /etc/init.d/mysql start

# Needed later to hold our credentials for bundling an AMI

sudo -H mkdir ~/.ec2

Before we go on, we’ll make sure the EBS volume is being used by MySQL. The data directory on the EBS volume is /vol/var/lib/mysql so we should expect new databases to be created there.

mysql -u root -p -e "create database db_on_ebs"

ls -l /vol/var/lib/mysql/

The listing should show that the new directory db_on_ebs was created. This proves that MySQL is using the EBS volume for its data store.

Creating the EBS Snapshot

All the above steps prepare the original instance and the EBS volume for being snapshotted. The following procedure can be used to snapshot the volume.

On the instance perform the following to stop MySQL and unmount the EBS volume:

sudo /etc/init.d/mysql stop

sudo umount /etc/mysql

sudo umount /var/log/mysql

sudo umount /var/lib/mysql

sudo umount /vol

Then, from your local machine, create a snapshot as follows. Remember the snapshot ID for later (snap-00000000 in this example).

ec2-create-snapshot vol-00000000

The snapshot is in progress and you can check its status with the ec2-describe-snapshots command.
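If you would rather wait for the snapshot in a script than check by hand, a polling loop along these lines could work. It assumes ec2-describe-snapshots prints a tab-separated SNAPSHOT line whose fourth field is the status (pending or completed); the function names snapshot_status and wait_for_snapshot are invented for this sketch.

```shell
# Print the status field from ec2-describe-snapshots output on stdin.
# Assumed layout: SNAPSHOT <tab> snap-id <tab> vol-id <tab> status <tab> ...
snapshot_status() {
    awk '$1 == "SNAPSHOT" { print $4; exit }'
}

# Poll every 10 seconds until the given snapshot reports "completed".
wait_for_snapshot() {
    until [ "$(ec2-describe-snapshots "$1" | snapshot_status)" = "completed" ]; do
        sleep 10
    done
}

# Usage:
#   wait_for_snapshot snap-00000000
```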

Preparing the AMI

At this point in the procedure we have the following set up already:

- an instance that uses an EBS volume for MySQL files.

- an EBS volume attached to that instance, having the MySQL files on it.

- an EBS snapshot of that volume.

Now we are ready to prepare the instance for becoming an AMI. This AMI, when launched, will be able to create a new EBS volume from the snapshot and mount it at startup time.

First, from your local machine, copy your credentials to the EC2 instance:

scp -i id_rsa-MyKeypair pk-whatever1234567890.pem cert-whatever1234567890.pem root@1.1.1.1:~/.ec2/

Back on the EC2 instance install Java (skipping the annoying interactive license agreement) and the EC2 API tools:

export DEBIAN_FRONTEND=noninteractive

echo sun-java6-jdk shared/accepted-sun-dlj-v1-1 select true | sudo /usr/bin/debconf-set-selections

echo sun-java6-jre shared/accepted-sun-dlj-v1-1 select true | sudo /usr/bin/debconf-set-selections

sudo -E apt-get install -y unzip sun-java6-jdk

sudo -H wget -O ~/ec2-api-tools.zip http://s3.amazonaws.com/ec2-downloads/ec2-api-tools.zip && \

cd ~ && unzip ec2-api-tools.zip && ln -s ec2-api-tools-1.3-36506 ec2-api-tools

Note: Future versions of the EC2 API tools will have a different version number, and the above command will need to change accordingly.

Next, set up the script that does the create-volume-and-mount-it magic at startup. Download it from here with the following command:

sudo curl -Lo /etc/init.d/create-ebs-vol-from-snapshot-and-mount \

https://sites.google.com/site/shlomosfiles/clouddevelopertips/create-ebs-vol-from-snapshot-and-mount?attredirects=0

The script has a number of items to customize:

- The EC2 account credentials: Put a pointer to your private key and certificate file into the script in the appropriate place. If you followed the above instructions these will be in /root/.ec2. Make sure the credentials are located on the instance’s root partition in order to ensure the keys are bundled into the AMI.

- The snapshot ID. This too can either be hard-coded into the script or, even better, provided as part of the user-data. It is controlled by the EBS_VOL_FROM_SNAPSHOT_ID setting. See below for an example of how to specify and override this value via the user-data.

- The JAVA_HOME directory. This is the location of the Java installation. On most Linux distributions this should point to /usr/lib/jvm/java-6-sun.

- The EC2_HOME directory. This is the location where the EC2 API tools are installed. If you followed the procedure above this will be /root/ec2-api-tools.

- The device attach point for the EBS volume. This is controlled by the EBS_ATTACH_DEVICE setting, and is /dev/sdh in these instructions.

- The filesystem mount directory for the EBS volume. This is controlled by the EBS_MOUNT_DIR setting, and is /vol in these instructions.

- The directories to be exported from the EBS volume. These are the directories that will be “mapped” to the root filesystem via mount --bind. These are specified in the EBS_EXPORTS setting.

- If you are creating an AMI for the EU region, uncomment the line export EC2_URL=https://eu-west-1.ec2.amazonaws.com by removing the leading #.
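Taken together, the customized settings might look something like the fragment below. This is an illustration only: the layout of the downloaded script may differ, the key and certificate file names are placeholders, and EC2_PRIVATE_KEY and EC2_CERT are the environment variables the EC2 API tools read rather than names confirmed from the script itself.

```shell
# Illustrative settings only; the downloaded script's layout may differ.

# Credentials (placeholder file names) as read by the EC2 API tools.
export EC2_PRIVATE_KEY=/root/.ec2/pk-whatever1234567890.pem
export EC2_CERT=/root/.ec2/cert-whatever1234567890.pem

# Java and EC2 API tools locations from the procedure above.
export JAVA_HOME=/usr/lib/jvm/java-6-sun
export EC2_HOME=/root/ec2-api-tools
export PATH="$PATH:$EC2_HOME/bin"

# Snapshot, device, mount point, and exported directories.
EBS_VOL_FROM_SNAPSHOT_ID=snap-00000000   # may be overridden via user-data
EBS_ATTACH_DEVICE=/dev/sdh
EBS_MOUNT_DIR=/vol
EBS_EXPORTS="/etc/mysql /var/lib/mysql /var/log/mysql"

# Uncomment for the EU region:
# export EC2_URL=https://eu-west-1.ec2.amazonaws.com
```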

Once you customize the script, set it up to run upon startup as follows:

sudo chmod +x /etc/init.d/create-ebs-vol-from-snapshot-and-mount

sudo update-rc.d create-ebs-vol-from-snapshot-and-mount start 89 S .

As mentioned above, if you do not want the newly-created EBS volume to persist after the instance terminates you can configure the script to be run on shutdown, allowing it to delete the volume. One way of doing this is to create the AMI with a shutdown hook already in place. To do this:

sudo ln -s /etc/init.d/create-ebs-vol-from-snapshot-and-mount /etc/rc0.d/K32create-ebs-vol-from-snapshot-and-mount

Alternatively, you can defer this decision to instance launch time, by passing in the above command via a user-data script – see below for more on this.

Remember: Running this script as part of the shutdown process as described above will delete the EBS volume. If you do not want this to happen automatically, don’t execute the above command. If you mistakenly ran the above command you can fix things as follows:

sudo rm /etc/rc0.d/K32create-ebs-vol-from-snapshot-and-mount
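For a sense of what the shutdown action involves, here is a hedged sketch of a teardown routine. It is not the downloaded script's actual code. The names run and teardown_volume are hypothetical, the volume ID is assumed to be known to the caller, and a DRY_RUN switch is included so the sequence can be inspected without touching anything.

```shell
# Echo commands instead of running them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical stop action: unmount everything, then detach and delete.
# Arguments: volume ID, mount directory, space-separated exported dirs.
teardown_volume() {
    vol_id="$1"
    mount_dir="$2"
    exports="$3"

    # Remove the bind mounts first, then the volume's own mount.
    for dir in $exports; do
        run umount "$dir"
    done
    run umount "$mount_dir"

    run ec2-detach-volume "$vol_id"
    # Deletion fails while the volume is still attached, so poll first.
    if [ "${DRY_RUN:-0}" != 1 ]; then
        until ec2-describe-volumes "$vol_id" | grep -q available; do
            sleep 5
        done
    fi
    run ec2-delete-volume "$vol_id"
}
```

Running it with DRY_RUN=1 prints the five commands in order, which is a cheap way to review the sequence before wiring it into a shutdown hook.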

Next is a little cleanup before bundling:

# New instances need their own host keys generated at first boot

sudo chmod +x /etc/init.d/ec2-ssh-host-key-gen

# New instances should not contain leftovers from this instance

sudo rm -f /root/.*hist*

sudo rm -f /var/log/*.gz

sudo find /var/log -name mysql -prune -o -type f -print | \

while read i; do sudo cp /dev/null $i; done

The instance is now ready to be bundled into an AMI, uploaded, and registered. The commands below show this process. For more about the considerations when bundling an AMI see this article by Eric Hammond.

bucket=com.mybucket.images

prefix=ubuntu-hardy-32bit-attach-snapshot-at-startup-for-mysql-20090805

export AWS_USER_ID=MY-USER-ID

export AWS_ACCESS_KEY_ID=MY-ACCESS-KEY

export AWS_SECRET_ACCESS_KEY=MY-SECRET-ACCESS-KEY

arch=i386

sudo -E ec2-bundle-vol \

-r $arch \

-d /mnt \

-p $prefix \

-u $AWS_USER_ID \

-k ~/.ec2/pk-*.pem \

-c ~/.ec2/cert-*.pem \

-s 10240 \

-e /mnt,/tmp,/root/.ssh

ec2-upload-bundle \

-b $bucket \

-m /mnt/$prefix.manifest.xml \

-a $AWS_ACCESS_KEY_ID \

-s $AWS_SECRET_ACCESS_KEY

Once the bundle has uploaded successfully, register it from your local machine as follows:

bucket=com.mybucket.images

prefix=ubuntu-hardy-32bit-attach-snapshot-at-startup-for-mysql-20090805

ec2-register $bucket/$prefix.manifest.xml

The ec2-register command displays an AMI ID (ami-012345678 in this example).

We are finally ready to test it out!

Launching a New Instance

Now we are ready to launch a new instance that creates and mounts an EBS volume from the snapshot. The snapshot ID is configurable via the user-data payload specified at instance launch time. Here is an example user-data payload showing how to specify the snapshot ID:

EBS_VOL_FROM_SNAPSHOT_ID=snap-00000000

Note that the format of the user-data payload is compatible with the Running User-Data Scripts technique – just make sure the first line of the user-data payload begins with a hashbang #! and that the EBS_VOL_FROM_SNAPSHOT_ID setting is located somewhere in the payload, at the beginning of a line.
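To illustrate why the setting must sit at the beginning of a line, here is one way a startup script could pull the snapshot ID out of the user-data. This is a sketch, not the downloaded script's actual parsing; the function name snapshot_id_from_user_data is hypothetical, and the user-data URL is the standard EC2 instance metadata endpoint.

```shell
# Print the value of the first line on stdin that begins with
# EBS_VOL_FROM_SNAPSHOT_ID=. Prints nothing if no such line exists.
snapshot_id_from_user_data() {
    sed -n 's/^EBS_VOL_FROM_SNAPSHOT_ID=//p' | head -n 1
}

# Usage on an instance:
#   curl -s http://169.254.169.254/latest/user-data | snapshot_id_from_user_data
```

Because the pattern is anchored with ^, a setting that is indented or embedded mid-line is ignored, which lets the same payload double as a user-data script.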

Launch an instance of the AMI with the user-data specifying the snapshot ID, in a different availability zone. The instance will be assigned an instance ID (in this example i-22222222) and a public IP address (in this example 2.2.2.2).

ec2-run-instances -z us-east-1c --key MyKeypair \

-d "EBS_VOL_FROM_SNAPSHOT_ID=snap-00000000" ami-012345678

ec2-describe-instances i-22222222

Once the ec2-describe-instances output shows that the instance is running, check for the new EBS volume that should have been created from the snapshot (in this example, vol-22222222) in the new availability zone.

ec2-describe-volumes
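If the account has many volumes, the full listing can be narrowed to those attached to the new instance. The filter below assumes the tools print tab-separated ATTACHMENT lines in the order volume ID, instance ID, device, status, attach time; the function name volumes_attached_to is hypothetical.

```shell
# Print IDs of volumes attached to the given instance. Reads
# ec2-describe-volumes output on stdin. Assumed layout of ATTACHMENT lines:
#   ATTACHMENT  vol-id  instance-id  device  status  attach-time
volumes_attached_to() {
    awk -F '\t' -v inst="$1" \
        '$1 == "ATTACHMENT" && $3 == inst { print $2 }'
}

# Usage:
#   ec2-describe-volumes | volumes_attached_to i-22222222
```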

Finally, ssh into the instance and verify that it is now working from the new EBS volume:

ssh -i id_rsa-MyKeypair root@2.2.2.2

mysql -u root -p -e "show databases"

You should see the db_on_ebs database in the results. This demonstrates that the startup sequence successfully created a new EBS volume, attached and mounted it, and set itself up to use the MySQL data on the EBS volume.

Cleaning Up

Don’t forget to clean up the pieces of this procedure when you no longer need them:

# the original instance

ec2-terminate-instances i-11111111

# the original EBS volume

ec2-delete-volume vol-00000000

# the instance that created a new volume from the snapshot

ec2-terminate-instances i-22222222

If you set up the shutdown hook to delete the EBS volume then you can verify that this works by checking that the ec2-describe-volumes output no longer contains the new EBS volume. Otherwise, delete it manually:

# the new volume created from the snapshot

ec2-delete-volume vol-22222222

And don’t forget to un-register the AMI and delete the files from S3 when you are done. These steps are not shown.

Making Changes to the Configuration

Now that you have a configuration using EBS snapshots which is easily scalable to any availability zone, how do you make changes to it?

Let’s say you want to add a web server to the AMI and your web server’s static content to the EBS volume. (I generally don’t recommend storing your web-layer data in the same place as your database storage, but this example serves as a useful illustration.) You would need to do the following:

- Launch an instance of the AMI specifying the snapshot ID in the user-data.

- Install the web server on the instance.

- Put your web server’s static content onto the instance (perhaps from S3) and test that the web server works.

- Stop the web server.

- Move the web server’s static content to the EBS volume.

- “mount --bind” the EBS locations to the original directories without adding entries to /etc/fstab.

- Restart the web server and test that the web server still works.

- Edit the startup script, adding entries for the web server’s directories to EBS_EXPORTS.

- Stop the web server and unmount (umount) all the mount bind directories and the EBS volume.

- Remove the mount bind and /vol entries for the EBS exported directories from /etc/fstab.

- Perform the cleanup prior to bundling.

- Bundle and upload the new AMI.

- Create a new snapshot of the EBS volume.

- Change your deployment configurations to start using the new AMI and the new snapshot ID.

If you decide that you would like the automatically-created EBS volumes to be deleted when the instances terminate, you have two ways to do this:

- Execute this command

sudo ln -s /etc/init.d/create-ebs-vol-from-snapshot-and-mount \

/etc/rc0.d/K32create-ebs-vol-from-snapshot-and-mount

and rebundle the AMI.

- Pass the above command to the instance via a user-data script. The user-data could also specify the snapshot ID, and might look like this:

#! /bin/bash

EBS_VOL_FROM_SNAPSHOT_ID=snap-00000000

ln -s /etc/init.d/create-ebs-vol-from-snapshot-and-mount \

/etc/rc0.d/K32create-ebs-vol-from-snapshot-and-mount

The technique of mounting an EBS volume created from a snapshot at startup was born of necessity: I needed a way to allow many instances across availability zones to share the same content which lives on an EBS drive. This article shows how you can apply the technique to your deployments. If you also find this technique useful, please share it in the comments!

Thanks

Eric Hammond reviewed early drafts of this article and provided valuable feedback. Thanks!