How can you handle DNS lookups in EC2 without going crazy each time a resource’s IP address changes? One solution is to use an Elastic IP, a stable IP address that can be remapped to different instances, but Elastic IPs are not appropriate for all situations. This article explores the various methods of managing name resolution with EC2 instances.

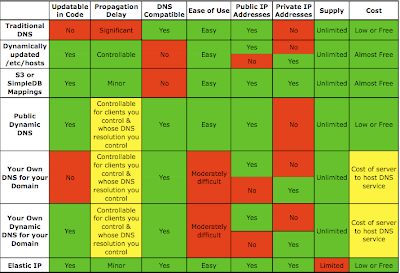

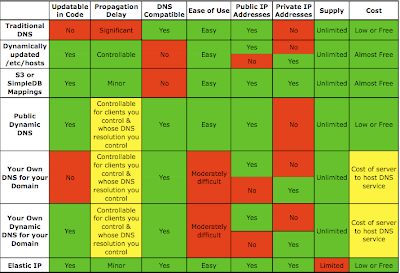

Features of Different Name Resolution Methods

Before diving into the methods themselves, let’s look at the factors to consider when evaluating them:

- Updatable in code. You will want to write code to make changes to the name resolution settings automatically, in response to infrastructure events (e.g. launching a new server).

- Propagation delay. It can take some time for changes to name resolution settings to propagate (especially with DNS). A solution should offer some degree of assurance that changes will propagate within a known and reasonable period of time. [Note that some clients (e.g. the IE browser or the Java runtime) by default ignore the DNS TTL, artificially increasing the propagation delay for DNS-based methods.]

- Compatible with DNS. If your service will be accessed by a web browser or other client that you do not control, your name resolution method will need to be compatible with DNS. Otherwise clients will not be able to resolve your hostnames properly.

- Ease of implementation. Some solutions, while technically sufficient, are difficult to implement.

- Public / Private IP addresses. Whether the solution can serve public and/or private IP addresses. If your clients are inside the same EC2 region then you want their lookups to resolve to the private IP address. Clients outside the same EC2 region should be served the public IP address.

- Supply. Is there any practical limitation on the number of name resolution entries?

- Cost. How much it costs to implement, including costs for idle resources and updating settings.

Methods of Name Resolution

As mentioned above, there are a number of different methods to manage name resolution. These are:

- Traditional DNS.

- Dynamically update the /etc/hosts file on the various application hosts. The /etc/hosts file on Linux (like the C:\Windows\System32\Drivers\etc\hosts file on Windows) contains host-name-to-IP-address mappings that are checked before DNS is consulted, allowing it to override DNS. The file can be updated via pull (initiated by the host) or push (initiated by an external agent).

- Store the mappings in S3 or SimpleDB. Clients must use the S3 or SimpleDB APIs for name resolution.

- Use a dynamic DNS provider.

- Run your own traditional DNS servers for your domain. Clients must be able to see these DNS servers.

- Run your own dynamic DNS servers for your domain. Clients must be able to see these DNS servers.

- Elastic IPs. The AWS pricing model discourages (though not strongly enough, I believe) Elastic IPs from being left unused, so you should use them for instances hosting services that are always on, such as your web server or your Facebook application. You should set up a DNS entry pointing the host names to the Elastic IPs, and then any remapping of the Elastic IP to a different instance happens via the EC2 API without requiring any change to DNS.

Here is a table showing how each of these name resolution methods stacks up against the others:

Notes:

- Dynamically updating /etc/hosts can be used to store either the public IP or the private IP but not both for the same client. You can use one /etc/hosts file for your clients inside the same EC2 region which contains the private IPs, and a different but corresponding /etc/hosts file for your clients outside the EC2 region (or outside EC2 completely) which contains the public IPs. The propagation delay is governed by the frequency with which you update the /etc/hosts file on each client. You can minimize this delay by increasing the frequency of updates. This technique is described in detail in an article by Tim Dysinger.

- Similarly, the two “run your own DNS” methods (Your Own DNS for your Domain, Your Own Dynamic DNS for your Domain) can be used to resolve to either the public IP address or the private IP address, but not both for the same client. You should set up your clients inside EC2 to utilize the DNS service inside EC2, and the domain should be configured to point to the DNS service running outside EC2 so that clients outside EC2 will see the public IPs. Note that clients running inside EC2 whose DNS resolution you do not control (for example, another EC2 user’s client) will be referred to the public IPs. Jeff Roberts offers some great practical suggestions for running your own DNS inside EC2.
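The /etc/hosts approach from the first note can be sketched as a small function that rewrites only a marked block of the file and leaves every other entry alone. This is a minimal illustration, not the technique from the article referenced above: the marker lines, the function name `render_hosts`, and the host names in the usage note are all hypothetical. The caller decides whether to pass private IPs (for clients inside the EC2 region) or public IPs (for clients outside it).

```python
from typing import Mapping

# Hypothetical marker lines that delimit the block this script owns.
BEGIN = "# BEGIN managed EC2 hosts"
END = "# END managed EC2 hosts"


def render_hosts(existing: str, mappings: Mapping[str, str]) -> str:
    """Return hosts-file text with the managed block replaced by `mappings`.

    `mappings` maps host name -> IP address. Lines outside the managed
    block (for example the localhost entry) are preserved unchanged.
    """
    kept = []
    inside = False
    for line in existing.splitlines():
        stripped = line.strip()
        if stripped == BEGIN:
            inside = True          # start skipping the old managed block
        elif stripped == END:
            inside = False         # stop skipping
        elif not inside:
            kept.append(line)      # keep everything we do not manage
    block = [BEGIN]
    block += [f"{ip}\t{name}" for name, ip in sorted(mappings.items())]
    block.append(END)
    return "\n".join(kept + block) + "\n"
```

A pull-style updater would read the current file, call something like `render_hosts(current_text, {"db1.example.internal": "10.0.0.5"})` on a schedule, and write the result back. How often it runs determines the propagation delay.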

This table demonstrates the following:

- Elastic IPs are the best choice when you need only a limited number of resolvable names and you will use them constantly. If you use their corresponding DNS name then they intelligently resolve to the public IP when looked up from the internet and to the private IP when looked up from within EC2.

- If you need an unlimited number of resolvable names within EC2 then you should run your own dynamic DNS within EC2.

- Methods that are incompatible with DNS should only be used with clients you control.

As we can see, Dynamic DNS (especially running your own) has one distinct advantage over using Elastic IPs: unlimited supply at no cost when unused.

When Running Your Own Dynamic DNS is Better than Elastic IPs

One application for running your own Dynamic DNS is a testing environment that includes large clusters of EC2 instances, for example a database cluster or application nodes, connected to one or more web-tier instances. These cluster instances will only be visible to the front-end web tier, so they do not need a publicly resolvable IP address. And your testing environment is not likely to be running all the time. Elastic IPs would work here (presuming you needed no more than 5, or you could convince AWS to increase your Elastic IP limit to meet your needs), but would cost money when unused. A more economical solution might be to use your own Dynamic DNS within EC2 for these instances. If you have spare capacity on an existing instance then you can put the Dynamic DNS service there; otherwise you will need another instance, making the cost less attractive. In any case you’ll need the instance hosting the Dynamic DNS to have an Elastic IP to allow failover without affecting the clients. And you’ll need a script to dynamically configure the /etc/resolv.conf on your EC2 clients to point to the private IP address of the Dynamic DNS instance by looking up its Elastic IP’s DNS name.
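The resolv.conf script mentioned above might look roughly like the following sketch. It relies on the behavior described earlier, that an Elastic IP's DNS name resolves to the private IP when looked up from within EC2. The function name, the optional fallback list, and the injectable `resolve` parameter are assumptions made for illustration. The sketch only builds the file contents; writing them to /etc/resolv.conf (as root) is left to the caller.

```python
import socket
from typing import Callable, Sequence


def render_resolv_conf(
    dns_instance_name: str,
    fallback_nameservers: Sequence[str] = (),
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> str:
    """Build resolv.conf text that lists the dynamic DNS instance first.

    `dns_instance_name` is the DNS name of the Elastic IP attached to the
    dynamic DNS instance. The lookup must happen from inside EC2 so that
    it yields the private IP.
    """
    private_ip = resolve(dns_instance_name)
    # Put the dynamic DNS server first, then any fallbacks, without duplicates.
    servers = [private_ip]
    servers += [ns for ns in fallback_nameservers if ns != private_ip]
    return "".join(f"nameserver {ns}\n" for ns in servers)
```

Note that the lookup has to run while the client still has a working resolver, so the script should resolve the name first and only then replace the file. Re-running it periodically lets clients follow the Elastic IP after a failover.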

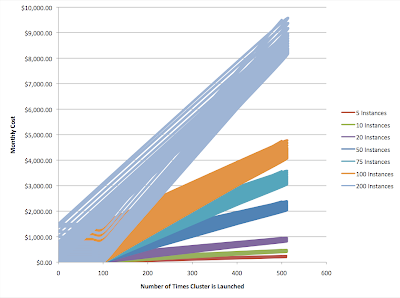

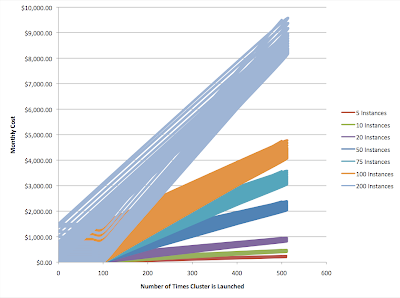

Let’s compare the monthly costs of using Elastic IPs with the costs of running your own dynamic DNS for a testing environment such as the above. The cost reflects the following ingredients and assumptions:

- The number of hours over the month that allocated addresses (DNS entries) are not associated with a live instance, in total for all allocated addresses. If you have 10 DNS entries / addresses and leave them unmapped for 10 hours each then you have 100 unmapped hours.

- The number of changes to the DNS mappings made that month.

- The fractional cost of running an instance just to serve the dynamic DNS. If you have spare capacity on an existing instance then this is the instance cost multiplied by the fraction of the capacity that the dynamic DNS service uses. If you need to spin up a dedicated instance for the dynamic DNS service then this is the entire cost of that instance.

- Pricing for Elastic IPs: free when in use. 1 cent per hour unused. First 100 remaps per month free, 10 cents per remap afterward.

It should be obvious that using dynamic DNS for this testing environment will be economical when

FractionalDNSInstanceCost < NumUnmappedHours * 0.01 + MAX(NumMappingChanges - 100, 0) * 0.1

For a cluster whose instances are all launched and shut down together, this can be rewritten in terms of the cluster’s size and usage:

FractionalDNSInstanceCost < NumInstances * ( NumHoursClusterUnused * 0.01 + MAX(NumTimesClusterIsLaunched - 100, 0) * 0.1)
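To make it easy to plug in your own numbers, here is a small sketch that encodes both inequalities exactly as written above, using the Elastic IP prices listed earlier. The function and constant names are illustrative choices, and the prices are the ones quoted in this article, so check them against current AWS pricing before relying on the result.

```python
UNUSED_RATE = 0.01   # dollars per hour an Elastic IP is unmapped
FREE_REMAPS = 100    # remaps per month included at no charge
REMAP_FEE = 0.10     # dollars per remap beyond the free allowance


def elastic_ip_cost(num_unmapped_hours, num_mapping_changes):
    """Monthly Elastic IP cost, right-hand side of the first inequality."""
    extra_remaps = max(num_mapping_changes - FREE_REMAPS, 0)
    return num_unmapped_hours * UNUSED_RATE + extra_remaps * REMAP_FEE


def cluster_elastic_ip_cost(num_instances, hours_cluster_unused, times_launched):
    """Monthly Elastic IP cost, right-hand side of the per-cluster form."""
    extra_launches = max(times_launched - FREE_REMAPS, 0)
    per_instance = hours_cluster_unused * UNUSED_RATE + extra_launches * REMAP_FEE
    return num_instances * per_instance


def dynamic_dns_is_cheaper(fractional_dns_instance_cost, elastic_cost):
    """True when running your own dynamic DNS costs less than Elastic IPs."""
    return fractional_dns_instance_cost < elastic_cost
```

For example, `cluster_elastic_ip_cost(100, 500, 101)` models a 100-instance cluster that sits unused for 500 hours and is launched 101 times. Under the per-cluster form that comes to roughly $510, which you would then compare against the cost of the instance serving dynamic DNS.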

Right about now I’m wishing Excel had better 3-D graphing capabilities. Here’s something helpful to visualize this:

The chart shows the monthly cost of running clusters of different sizes according to how many times the cluster is launched. The color “bands” show the areas in which the monthly cost lies, depending on how many hours the cluster remains unused. For a given number of times launched (i.e. for a given vertical line), the “bottom” point of each band is the cost when the cluster is unused zero hours (i.e. always on), and the “top” point is the cost when the cluster is unused for 500 hours (about 20 days).

The dominant factors are, first, the number of instances in the cluster and, second, the number of times the cluster will be launched. A cluster of 100 instances costs $10 each time it is launched beyond the first 100 (plus $1 for each hour unused). For large cluster sizes, the more times you launch, the higher the cost of using Elastic IPs will be and the more attractive the run-your-own dynamic DNS option becomes.