This is a true story about a lot of data. The cast of characters is as follows:

The Protagonist: Me.

The Hero: Jets3t, a Java library for using Amazon S3.

The Villain: Decisions made long ago, for forgotten reasons.

Innocent Bystanders: My client.

Once Upon a Time…

Amazon S3 is a great place to store media files, and it can serve those files directly instead of your web server doing so, thereby saving your server’s network and CPU for more important tasks. There are some gotchas with serving files directly from S3, though, and it is these gotchas that had my client locked in to paying for bandwidth and CPU to serve media files from their own web server.

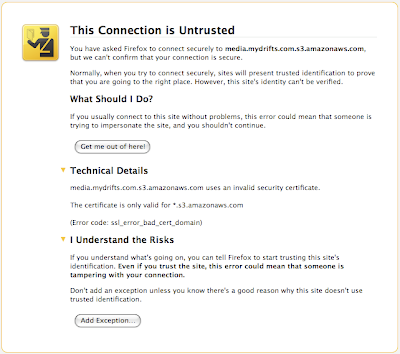

You see, a few years ago when my client first created their S3 bucket, they named it media.example.com. Public objects in that bucket could be accessed via the URL http://s3.amazonaws.com/media.example.com/objectKey or via the Virtual Host style URL http://media.example.com.s3.amazonaws.com/objectKey. If you’re just serving images via HTTP then this can work for you. But you might have a good reason to convince the browser that all the media is being served from your domain media.example.com (for example, when using Flash, which requires an appropriately configured crossdomain.xml). Using a URL that lives at s3.amazonaws.com or a subdomain of that host will not suffice for these situations.

Luckily, S3 lets you set up your DNS in a special manner, convincing the world that the same object lives at the URL http://media.example.com/objectKey. All you need to do is to set up a DNS CNAME alias pointing media.example.com to media.example.com.s3.amazonaws.com. The request will be routed to S3, which will look at the HTTP Host header and discover the bucket name media.example.com.

So what’s the problem? That’s all great for a bucket with a name that works in DNS. But it won’t work for a bucket whose name is Bucket.example.com, because DNS is case insensitive: there are limitations on the name of a bucket if you want to use the DNS alias. This is where we reveal a secret: the bucket was not really named media.example.com. For some reason nobody remembers, the bucket was named Media.example.com, with a capital letter that DNS cannot preserve. This makes all the difference in the world, because S3 cannot serve this bucket via the Virtual Host method. You get a NoSuchBucket error when you try to access http://Media.example.com.s3.amazonaws.com/objectKey (equivalent to http://Media.example.com/objectKey with the appropriate DNS CNAME in place).
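To make the naming limitation concrete, here is a minimal sketch of a check for whether a bucket name can be used with the Virtual Host style. It is a simplified approximation of S3’s naming guidance (lowercase letters, digits, dots and hyphens, 3 to 63 characters, not shaped like an IP address), not the complete rule set, and the class and method names are my own invention.

```java
import java.util.regex.Pattern;

final class BucketNames {
    // Dot-separated labels of lowercase letters, digits, and hyphens;
    // each label must start and end with a letter or digit.
    private static final Pattern DNS_STYLE = Pattern.compile(
            "[a-z0-9]([a-z0-9-]*[a-z0-9])?(\\.[a-z0-9]([a-z0-9-]*[a-z0-9])?)*");

    // Names shaped like an IPv4 address are excluded.
    private static final Pattern IP_STYLE = Pattern.compile("\\d+\\.\\d+\\.\\d+\\.\\d+");

    /** Simplified check: can this bucket be addressed as bucketName.s3.amazonaws.com? */
    static boolean isVirtualHostFriendly(String bucketName) {
        int length = bucketName.length();
        return length >= 3 && length <= 63
                && DNS_STYLE.matcher(bucketName).matches()
                && !IP_STYLE.matcher(bucketName).matches();
    }

    public static void main(String[] args) {
        System.out.println(isVirtualHostFriendly("media.example.com")); // true
        System.out.println(isVirtualHostFriendly("Media.example.com")); // false
    }
}
```

Running a check like this before creating a bucket would have caught the capital letter years earlier.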

As a workaround my client developed an application that dynamically loaded the media onto the server and served it directly from there. This server serviced media.example.com, and it would essentially do the following for each requested file:

1. Do we already have this objectKey on our local filesystem? If yes, go to step 3.

2. Fetch the object from S3 via http://s3.amazonaws.com/Media.example.com/objectKey and save it to the local filesystem.

3. Serve the file from the local filesystem.

This workaround allowed the client to release URLs that looked correct, but required using a separate server for the job. It cost extra time (when there was a cache miss) and money (to operate the server).
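The three steps of the workaround can be sketched roughly as follows. This is not my client’s actual application: CachingMediaServer and its fetchFromS3 function are hypothetical stand-ins (the function represents the HTTP GET against s3.amazonaws.com), and a real server would also need to validate object keys before turning them into file paths.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.function.Function;

final class CachingMediaServer {
    private final Path cacheDir;
    // Hypothetical stand-in for fetching the object from S3 over HTTP.
    private final Function<String, byte[]> fetchFromS3;

    CachingMediaServer(Path cacheDir, Function<String, byte[]> fetchFromS3) {
        this.cacheDir = cacheDir;
        this.fetchFromS3 = fetchFromS3;
    }

    /** Returns the object's bytes, fetching from S3 only on a cache miss. */
    byte[] serve(String objectKey) {
        Path cached = cacheDir.resolve(objectKey);
        try {
            if (!Files.exists(cached)) {                     // step 1: do we have it locally?
                byte[] body = fetchFromS3.apply(objectKey);  // step 2: fetch from S3...
                Files.createDirectories(cached.getParent());
                Files.write(cached, body);                   // ...and save it
            }
            return Files.readAllBytes(cached);               // step 3: serve from local disk
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Every cache miss pays for the fetch from S3 and the local write before the first byte is served, which is the extra time mentioned above.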

The challenge? To remove the need for this caching server and allow the URLs to be served directly from S3 via media.example.com.

Just Move the Objects, Right?

It might seem obvious: Why not simply move the objects to a correctly-named bucket? Turns out that’s not quite so simple to do in this case.

Obviously, if I were dealing with a few hundred, a few thousand, or even tens of thousands of objects, I could use a GUI tool such as CloudBerry Explorer or the S3Fox Organizer Firefox Extension. But this client is a popular web site and had been storing media in the bucket for a few years already. They had about 5 billion objects in the bucket (which is 5% of the total number of objects in S3). These tools crashed upon viewing the bucket. So, no GUI for you.

S3 is a hosted object store system. Why not just use its MOVE command (via the API) to move the objects from the wrong bucket to the correctly-named bucket? Well, it turns out that S3 has no MOVE command.

Thankfully, S3 has a COPY command which allows you to copy an object on the server side, without downloading the object’s contents and uploading them again to the new location. Using some creative programming you can put together a COPY and a DELETE (only if the COPY succeeded!) to simulate a MOVE. I tried using the boto Python library, but it choked on manipulating any object in the bucket named Media.example.com (the name is legal, just not recommended), so I couldn’t use this tool. The Java-based Jets3t library was able to handle this unfortunate bucket name just fine, and it also provides a convenience method to move objects via COPY and DELETE. The objects in this bucket are immutable, so we don’t need to worry about consistency.
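The COPY-then-DELETE idea can be sketched with an in-memory stand-in for the two buckets. Nothing here is Jets3t or S3 API; the Bucket class and move method are made up purely to show the ordering: the source is deleted only after the copy is confirmed in the destination.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

final class CopyThenDelete {
    /** Hypothetical in-memory stand-in for a bucket: object key -> contents. */
    static final class Bucket {
        final Map<String, String> objects = new ConcurrentHashMap<>();
    }

    /** Returns true only if the object now lives in dest and is gone from source. */
    static boolean move(Bucket source, Bucket dest, String key) {
        String body = source.objects.get(key);
        if (body == null) {
            return false; // nothing to move (perhaps it was moved on an earlier pass)
        }
        dest.objects.put(key, body); // COPY
        if (!body.equals(dest.objects.get(key))) {
            return false; // copy not confirmed: leave the source object alone
        }
        source.objects.remove(key); // DELETE, only after the copy succeeded
        return true;
    }

    public static void main(String[] args) {
        Bucket source = new Bucket();
        Bucket dest = new Bucket();
        source.objects.put("42/abc", "media bytes");
        System.out.println(move(source, dest, "42/abc")); // true
        System.out.println(source.objects.isEmpty());     // true
    }
}
```

Because a failed move leaves the source object in place, the worst outcome is an object that still needs moving, never a lost one.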

So I’m all set with Jets3t.

Or so I thought.

First Attempt: Make a List

My first attempt was to:

- List all the objects in the bucket and put them in a database.

- Run many client programs that requested the “next” object key from the database and deleted the entry from the database when it was successfully moved to the correctly-named bucket.

This approach would provide a way to make sure all the objects were moved successfully.

Unfortunately, listing so many objects took too long – I allowed a process to list the bucket’s contents for a full 24 hours before killing it. I don’t know how far it got, but I didn’t feel like waiting around for it to finish dumping its output to a file, then waiting some more to import the list into a database.

Second Attempt: Make a Smaller List

I thought about the metadata I had: The objects in the bucket all had object keys with a particular structure:

/binNumber/oneObjectKey

binNumber was a number from 0 to 4.5 million, and each binNumber served as the prefix for approximately 1200 objects (which works out to 5.4 billion objects total in the bucket). The names of these objects were essentially random letters and numbers after the binNumber/ component. S3 has a method for listing the objects whose keys begin with a given prefix. That is perfect for my needs, since each call returns a list of very manageable size.
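To see why prefix listing keeps each list small, here is a rough sketch over an in-memory sorted set of keys. This is only a model of the idea, not how S3 implements listing, and PrefixListing is a name I made up. It also shows why the trailing "/" belongs in the prefix: without it, bin 1 would pick up the keys of bin 10 as well.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.NavigableSet;
import java.util.TreeSet;

final class PrefixListing {
    /** Returns the keys that start with the given prefix, in sorted order. */
    static List<String> listWithPrefix(NavigableSet<String> keys, String prefix) {
        List<String> matches = new ArrayList<>();
        // In sorted order, all keys sharing a prefix sit next to each other,
        // so we can start at the prefix and stop at the first non-match.
        for (String key : keys.tailSet(prefix, true)) {
            if (!key.startsWith(prefix)) {
                break;
            }
            matches.add(key);
        }
        return matches;
    }

    public static void main(String[] args) {
        NavigableSet<String> keys = new TreeSet<>(Arrays.asList("1/a", "1/b", "10/c", "2/d"));
        System.out.println(listWithPrefix(keys, "1/")); // [1/a, 1/b]
        System.out.println(listWithPrefix(keys, "1"));  // [1/a, 1/b, 10/c]
        System.out.println(4_500_000L * 1200);          // 5400000000
    }
}
```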

So I coded up something quick in Java using Jets3t. Here’s the initial code snippet:

```java
package com.orchestratus.s3;

import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;

import org.jets3t.service.S3Service;
import org.jets3t.service.S3ServiceException;
import org.jets3t.service.acl.AccessControlList;
import org.jets3t.service.impl.rest.httpclient.RestS3Service;
import org.jets3t.service.model.S3Bucket;
import org.jets3t.service.model.S3Object;
import org.jets3t.service.security.AWSCredentials;

public class MoveObjects {
    private static final String AWS_ACCESS_KEY_ID = .... ;
    private static final String AWS_SECRET_ACCESS_KEY = .... ;
    private static final String SOURCE_BUCKET_NAME = "Media.example.com";
    private static final String DEST_BUCKET_NAME = "media.example.com";

    // S3ServiceException and InterruptedException are checked, so main declares them.
    public static void main(String[] args) throws S3ServiceException, InterruptedException {
        AWSCredentials awsCredentials = new AWSCredentials(AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY);
        S3Service restService = new RestS3Service(awsCredentials);
        S3Bucket sourceBucket = restService.getBucket(SOURCE_BUCKET_NAME);
        final String delimiter = "/";

        String[] prefixes = new String[...];
        for (int i = 0; i < prefixes.length; ++i) {
            // fill the list of binNumbers from the command-line args (not shown)
            prefixes[i] = String.valueOf(...);
        }

        // Note: Executors.newFixedThreadPool queues tasks without bound, so the
        // RejectedExecutionException branch below only fires if the pool is
        // replaced by one with a bounded queue.
        ExecutorService tPool = Executors.newFixedThreadPool(32);
        long delay = 50;
        for (String prefix : prefixes) {
            // list only the objects whose keys start with "binNumber/"
            S3Object[] sourceObjects = restService.listObjects(sourceBucket, prefix + delimiter, delimiter);
            if (sourceObjects != null && sourceObjects.length > 0) {
                System.out.println(" At key " + sourceObjects[0].getKey() + ", this many: " + sourceObjects.length);
                for (int i = 0; i < sourceObjects.length; ++i) {
                    final S3Object sourceObject = sourceObjects[i];
                    final String sourceObjectKey = sourceObject.getKey();
                    // the copy in the destination bucket should be publicly readable
                    sourceObject.setAcl(AccessControlList.REST_CANNED_PUBLIC_READ);
                    Mover mover = new Mover(restService, sourceObject, sourceObjectKey);
                    while (true) {
                        try {
                            tPool.execute(mover);
                            delay = 50;
                            break;
                        } catch (RejectedExecutionException r) {
                            System.out.println("Queue full: waiting " + delay + " ms");
                            Thread.sleep(delay); // backoff and retry
                            delay += 50;
                        }
                    }
                }
            }
        }
        tPool.shutdown();
        tPool.awaitTermination(360000, TimeUnit.SECONDS);
        System.out.println(" Completed!");
    }

    /** Moves a single object from the source bucket to the destination bucket. */
    private static class Mover implements Runnable {
        final S3Service restService;
        final S3Object sourceObject;
        final String sourceObjectKey;

        Mover(final S3Service restService, final S3Object sourceObject, final String sourceObjectKey) {
            this.restService = restService;
            this.sourceObject = sourceObject;
            this.sourceObjectKey = sourceObjectKey;
        }

        @Override
        public void run() {
            try {
                // COPY to the destination, then DELETE from the source
                Map moveResult = restService.moveObject(SOURCE_BUCKET_NAME, sourceObjectKey, DEST_BUCKET_NAME, sourceObject, false);
                if (moveResult.containsKey("DeleteException")) {
                    // the copy succeeded but the delete failed; a later pass retries it
                    System.out.println("Error: " + sourceObjectKey);
                }
            } catch (S3ServiceException e) {
                System.out.println("Error: " + sourceObjectKey + " EXCEPTION: " + e.getMessage());
            }
        }
    }
}
```

The code uses an Executor to control a pool of threads. Each object to move is encapsulated in a Mover and handed to one of those threads. All objects with a given prefix (binNumber) are listed and then added to the Executor’s pool to be moved. The credential values and the code that builds the array of prefixes are not shown.

We need to be concerned that the thread pool will fill up faster than we can handle the operations we’re enqueueing, so we have backoff-and-retry logic in that code. But notice that we don’t care if a particular object’s move operation fails. This is because we will run the same program a second time, after covering all the binNumber prefixes, to catch any objects that have been left behind (and a third time, too, until no more objects are left in the source bucket).
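One caveat worth spelling out: a pool created with Executors.newFixedThreadPool has an unbounded queue, so execute only rejects tasks after the pool has been shut down. For the backoff-and-retry loop to actually kick in, the pool needs a bounded queue. The following is a minimal sketch of that variation, not the code I ran; the class name, the tiny pool and queue sizes, and the demo tasks are all assumptions chosen to make the rejection easy to trigger.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class BoundedSubmit {
    /** A fixed-size pool whose queue is bounded; the default handler rejects when it is full. */
    static ThreadPoolExecutor newBoundedPool(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<Runnable>(queueCapacity));
    }

    /** Retries until the task is accepted. Must not be called after the pool is shut down. */
    static void submitWithBackoff(ExecutorService pool, Runnable task) {
        long delay = 50;
        while (true) {
            try {
                pool.execute(task);
                return;
            } catch (RejectedExecutionException queueFull) {
                pause(delay); // back off, then retry with a longer wait
                delay += 50;
            }
        }
    }

    static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }

    /** Submits taskCount short tasks to a tiny pool and returns how many completed. */
    static int runDemo(int taskCount) {
        ExecutorService pool = newBoundedPool(2, 2);
        AtomicInteger completed = new AtomicInteger();
        for (int i = 0; i < taskCount; i++) {
            submitWithBackoff(pool, () -> {
                pause(5);
                completed.incrementAndGet();
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(20) + " tasks completed");
    }
}
```

With a bounded queue, the submitting thread slows down to the pace of the workers instead of piling up pending tasks in memory.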

I ran this code on an EC2 m1.xlarge instance in two simultaneous processes, each of which was given half of the binNumber prefixes to work with. I settled on 32 threads in the thread pool after a few experiments showed this number ran the fastest. I made sure to set the proper number of underlying HTTP connections for Jets3t to use, with these arguments: -Ds3service.max-thread-count=32 -Dhttpclient.max-connections=60. Things were going well for a few hours.
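Splitting the binNumber prefixes between processes is simple arithmetic. Here is one way it could be sketched; the BinRanges helper is hypothetical (my real program took its bin numbers from the command line), and it assumes the bins are numbered contiguously from 0.

```java
final class BinRanges {
    /** Returns {startInclusive, endExclusive} of the bins assigned to worker `index` of `workers`. */
    static long[] rangeFor(long totalBins, int workers, int index) {
        long start = totalBins * index / workers;
        long end = totalBins * (index + 1) / workers;
        return new long[] {start, end};
    }

    public static void main(String[] args) {
        long totalBins = 4_500_000L; // assumed bin count, taken from the rough figure above
        for (int worker = 0; worker < 2; worker++) {
            long[] range = rangeFor(totalBins, 2, worker);
            System.out.println("process " + worker + ": bins " + range[0] + " to " + (range[1] - 1));
        }
    }
}
```

Since each bin is moved independently, the ranges need no coordination between processes beyond not overlapping.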

Third Attempt: Make it More Robust

After a few hours I noticed that the rate of progress was slowing. I didn’t have exact numbers, but I saw that things were just taking longer in minute 350 than they had taken in minute 10. I could have taken on the challenge of debugging long-running, multithreaded code. Or I could hack in a workaround.

The workaround I chose was to force the program to terminate every hour, and to restart itself. I added the following code to the main method:

```java
// exit every hour (uses java.util.Timer and java.util.TimerTask)
Timer t = new Timer(true); // daemon timer thread
TimerTask tt = new TimerTask() {
    @Override
    public void run() {
        System.out.println("Killing myself!");
        System.exit(42);
    }
};
final long dieMillis = 3600 * 1000;
t.schedule(tt, dieMillis);
```

And I wrapped the program in a “forever” wrapper script:

```bash
#!/bin/bash
# Re-launch the given command every time it exits.
while true; do
    DATE=$(date)
    echo "$DATE: $0: launching $*"
    "$@" 2>&1
done
```

This script is invoked as follows:

```bash
ARGS=...
./forever.sh nohup java -Ds3service.max-thread-count=32 -Dhttpclient.max-connections=60 \
    -classpath bin/:lib/jets3t-0.7.2.jar:lib/commons-logging-1.1.1.jar:lib/commons-httpclient-3.1.jar:lib/commons-codec-1.3.jar \
    com.orchestratus.s3.MoveObjects $ARGS >> nohup.out 2>&1 &
```

Whenever the Java program terminates, the forever wrapper script re-launches it with the same arguments. This works properly because the only objects left in the source bucket are those that haven’t been moved yet. Eventually the move ran to completion, and from then on the program would start, check all its binNumber prefixes, find nothing, exit, restart, find nothing, exit, restart, etc.

The whole process took 5 days to completely move all objects to the new bucket. Then I gave my client the privilege of deleting the Media.example.com bucket.

Lessons Learned

Here are some important lessons I learned and reinforced through this project.

Use the metadata to your benefit

Sometimes the only thing you know about a problem is its shape, not its actual contents. In this case I knew the general structure of the object keys, and this was enough to go on even if I couldn’t discover every object key a priori. This is a key principle when working with large amounts of data: the metadata is your friend.

Robustness is a feature

It took a few iterations until I got to a point where things were running consistently fast. And it took some advance planning to design a scheme that would gracefully tolerate failure to move some objects. But without these features I would have had to manually intervene when problems arose. Don’t let intermittent failure delay a long-running process.

Sometimes it doesn’t pay to debug

I used an ugly hack workaround to force the process to restart every hour instead of debugging the actual underlying problem causing it to gradually slow down. For this code, which was one-off code that I wrote for my specific circumstances, I decided this was a more effective approach than getting bogged down making it correct. It works fast when brute-forced, so it didn’t need to be truly corrected.

Repeatability

I’ve been thinking about how someone would repeat my experiments and discover improvements to the techniques I employed. We could probably get by without actually copying and deleting the objects; instead, we could perform two successive calls per object, perhaps to fetch different metadata headers. We’d need some public S3 bucket with many millions of objects in it to make a comparable test case. And we’d need an S3 account willing to let users play in it.

Any takers?