Amazon S3 is a great place to store static content for your web site. If the content is sensitive, you’ll want to keep it from being visible while in transit from the S3 servers to the client. The standard way to secure content in transit is https: simply request the content via an https URL. However, this approach has a problem: it often fails for content in S3 buckets that are accessed via a virtual host URL. Here is an examination of the problem and a workaround.

Accessing S3 Buckets via Virtual Host URLs

S3 provides two ways to access your content. One way uses s3.amazonaws.com host name URLs, such as this:

http://s3.amazonaws.com/mybucket.mydomain.com/myObjectKey

The other way to access your S3 content uses a virtual host name in the URL:

http://mybucket.mydomain.com.s3.amazonaws.com/myObjectKey

Both of these URLs map to the same object in S3.
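
To make the two URL styles concrete, here is a minimal Java sketch that builds both forms for the same bucket and key. It assumes the default s3.amazonaws.com endpoint and object keys that need no percent-encoding; the class and method names are hypothetical helpers, not part of any AWS SDK.

```java
import java.net.URI;

public class S3UrlForms {
    // Default S3 endpoint (assumption: no region-specific endpoint involved).
    static final String ENDPOINT = "s3.amazonaws.com";

    // Path style: the host name is the S3 endpoint; the bucket is the first path segment.
    static URI pathStyle(String scheme, String bucket, String key) {
        return URI.create(scheme + "://" + ENDPOINT + "/" + bucket + "/" + key);
    }

    // Virtual host style: the bucket name becomes the leftmost part of the host name.
    static URI virtualHost(String scheme, String bucket, String key) {
        return URI.create(scheme + "://" + bucket + "." + ENDPOINT + "/" + key);
    }

    public static void main(String[] args) {
        // Both URLs refer to the same object.
        System.out.println(pathStyle("http", "mybucket.mydomain.com", "myObjectKey"));
        System.out.println(virtualHost("http", "mybucket.mydomain.com", "myObjectKey"));
    }
}
```

Note where the bucket name ends up in each case: in the path for the first form, in the host name for the second. That difference is what matters once https enters the picture.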

You can make the virtual host name URL shorter by setting up a DNS CNAME that maps mybucket.mydomain.com to mybucket.mydomain.com.s3.amazonaws.com. With this DNS CNAME alias in place, the above URL can also be written as follows:

http://mybucket.mydomain.com/myObjectKey

This shorter virtual host name URL works only if you set up the DNS CNAME alias for the bucket.
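
A minimal sketch of what that alias looks like, assuming a BIND-style zone file (the record syntax your DNS provider expects may differ). What it illustrates is that the bucket name and the custom host name must be identical; the class and method names are hypothetical.

```java
public class S3CnameSketch {
    // Default S3 endpoint suffix (assumption: no region-specific endpoint).
    static final String S3_SUFFIX = ".s3.amazonaws.com";

    // BIND-style CNAME line: the alias on the left is the bucket name itself,
    // the target on the right is the virtual host name S3 already serves.
    // Trailing dots mark fully qualified names in zone-file syntax.
    static String cnameRecord(String bucket) {
        return bucket + ". IN CNAME " + bucket + S3_SUFFIX + ".";
    }

    // With the alias in place, the bucket name alone serves as the host name.
    static String shortUrl(String bucket, String key) {
        return "http://" + bucket + "/" + key;
    }

    public static void main(String[] args) {
        System.out.println(cnameRecord("mybucket.mydomain.com"));
        System.out.println(shortUrl("mybucket.mydomain.com", "myObjectKey"));
    }
}
```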

Virtual host names in S3 are a convenient feature because they allow you to hide the actual location of the content from the end-user: you can provide the URL http://mybucket.mydomain.com/myObjectKey and then freely change the DNS entry for mybucket.mydomain.com (to point to an actual server, perhaps) without changing the application. With the CNAME alias pointing to mybucket.mydomain.com.s3.amazonaws.com, end-users do not know that the content is actually being served from S3. Without the DNS CNAME alias you’ll need to explicitly use one of the URLs that contain s3.amazonaws.com in the host name.

The Problem with Accessing S3 via https URLs

https encrypts the transferred data and prevents it from being recovered by anyone other than the client and the server. Thus, it is the natural choice for applications where protecting the content in transit is important. However, https relies on internet host names for verifying the identity certificate of the server, and so it is very sensitive to the host name specified in the URL.

To illustrate this more clearly, consider the servers at s3.amazonaws.com. They all have a certificate issued to *.s3.amazonaws.com. [“Huh?” you say. Yes, the SSL certificate for a site specifies the host name that the certificate represents. Part of the handshaking that sets up the secure connection ensures that the host name of the certificate matches the host name in the request. The * indicates a wildcard certificate, and means that the certificate is valid for any subdomain also.] If you request the https URL https://s3.amazonaws.com/mybucket.mydomain.com/myObjectKey, then the certificate’s host name matches the requested URL’s host name component, and the secure connection can be established.

If you request an object in a bucket without any periods in its name via a virtual host https URL, things also work fine. The requested URL can be https://aSimpleBucketName.s3.amazonaws.com/myObjectKey. This request will arrive at an S3 server (whose certificate was issued to *.s3.amazonaws.com), which will notice that the URL’s host name is indeed a subdomain of s3.amazonaws.com, and the secure connection will succeed.

However, if you request the virtual host URL https://mybucket.mydomain.com.s3.amazonaws.com/myObjectKey, what happens? The host name component of the URL is mybucket.mydomain.com.s3.amazonaws.com, but the actual server that gets the request is an S3 server whose certificate was issued to *.s3.amazonaws.com. Is mybucket.mydomain.com.s3.amazonaws.com a subdomain of s3.amazonaws.com? It depends who you ask, but most up-to-date browsers and SSL implementations will say “no.” A multi-level subdomain – that is, a subdomain that has more than one period in it – is not considered to be a proper subdomain by recent Firefox, Internet Explorer, Java, and wget clients. So the client will report that the server’s SSL certificate, issued to *.s3.amazonaws.com, does not match the host name of the request, mybucket.mydomain.com.s3.amazonaws.com, and refuse to establish a secure connection.
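
The matching rule can be sketched in a few lines of Java. This is a simplified illustration of the RFC 2818 behavior described here (a leading "*" stands for exactly one DNS label), not the JDK's actual host name checker, and it only handles a leading "*." wildcard. The two certificate names are the ones a reader quotes from the S3 certificate in the comments on this page.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

public class WildcardHostMatch {
    // True if a single certificate name matches the requested host name.
    static boolean matches(String certName, String host) {
        String c = certName.toLowerCase(Locale.ROOT);
        String h = host.toLowerCase(Locale.ROOT);
        if (!c.startsWith("*.")) {
            return c.equals(h); // no wildcard: exact match only
        }
        String suffix = c.substring(1); // e.g. ".s3.amazonaws.com"
        if (!h.endsWith(suffix)) {
            return false;
        }
        String label = h.substring(0, h.length() - suffix.length());
        // The wildcard must stand for exactly one non-empty label, i.e. no periods.
        return !label.isEmpty() && label.indexOf('.') < 0;
    }

    // True if any name on the certificate matches the host name.
    static boolean matchesAny(List<String> certNames, String host) {
        for (String name : certNames) {
            if (matches(name, host)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<String> s3Names = Arrays.asList("*.s3.amazonaws.com", "s3.amazonaws.com");
        String[] hosts = {
            "s3.amazonaws.com",
            "asimplebucketname.s3.amazonaws.com",
            "mybucket.mydomain.com.s3.amazonaws.com",
            "mybucket.mydomain.com"
        };
        for (String host : hosts) {
            System.out.println(host + " -> " + matchesAny(s3Names, host));
        }
    }
}
```

Running main prints true for the first two host names and false for the last two. The last one is the CNAME case discussed next: it fails because it is not under s3.amazonaws.com at all.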

The same problem occurs when you request the virtual host https URL https://mybucket.mydomain.com/myObjectKey. The request arrives – after the client discovers that mybucket.mydomain.com is a DNS CNAME alias for mybucket.mydomain.com.s3.amazonaws.com – at an S3 server with an SSL certificate issued to *.s3.amazonaws.com. In this case the host name mybucket.mydomain.com clearly does not match the host name on the certificate, so the secure connection again fails.

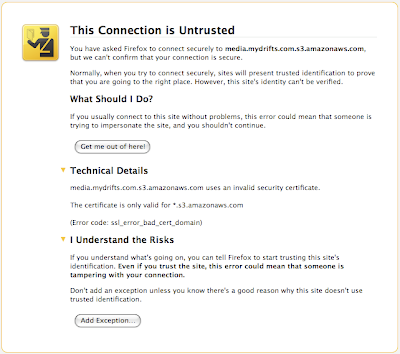

Here is what a failed certificate check looks like in Firefox 3.5, when requesting https://images.mydrifts.com.s3.amazonaws.com/someContent.txt:

Here is what happens in Java:

javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: No subject alternative DNS name matching media.mydrifts.com.s3.amazonaws.com found.
    at com.sun.net.ssl.internal.ssl.Alerts.getSSLException(Alerts.java:174)
    at com.sun.net.ssl.internal.ssl.SSLSocketImpl.fatal(SSLSocketImpl.java:1591)
    at com.sun.net.ssl.internal.ssl.Handshaker.fatalSE(Handshaker.java:187)
    at com.sun.net.ssl.internal.ssl.Handshaker.fatalSE(Handshaker.java:181)
    at com.sun.net.ssl.internal.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:975)
    at com.sun.net.ssl.internal.ssl.ClientHandshaker.processMessage(ClientHandshaker.java:123)
    at com.sun.net.ssl.internal.ssl.Handshaker.processLoop(Handshaker.java:516)
    at com.sun.net.ssl.internal.ssl.Handshaker.process_record(Handshaker.java:454)
    at com.sun.net.ssl.internal.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:884)
    at com.sun.net.ssl.internal.ssl.SSLSocketImpl.performInitialHandshake(SSLSocketImpl.java:1096)
    at com.sun.net.ssl.internal.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1123)
    at com.sun.net.ssl.internal.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1107)
    at sun.net.www.protocol.https.HttpsClient.afterConnect(HttpsClient.java:405)
    at sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(AbstractDelegateHttpsURLConnection.java:166)
    at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:977)
    at java.net.HttpURLConnection.getResponseCode(HttpURLConnection.java:373)
    at sun.net.www.protocol.https.HttpsURLConnectionImpl.getResponseCode(HttpsURLConnectionImpl.java:318)
Caused by: java.security.cert.CertificateException: No subject alternative DNS name matching media.mydrifts.com.s3.amazonaws.com found.
    at sun.security.util.HostnameChecker.matchDNS(HostnameChecker.java:193)
    at sun.security.util.HostnameChecker.match(HostnameChecker.java:77)
    at com.sun.net.ssl.internal.ssl.X509TrustManagerImpl.checkIdentity(X509TrustManagerImpl.java:264)
    at com.sun.net.ssl.internal.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:250)
    at com.sun.net.ssl.internal.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:954)

And here is what happens in wget:

$ wget -nv https://media.mydrifts.com.s3.amazonaws.com/someContent.txt
ERROR: Certificate verification error for media.mydrifts.com.s3.amazonaws.com: unable to get local issuer certificate
ERROR: certificate common name `*.s3.amazonaws.com' doesn't match requested host name `media.mydrifts.com.s3.amazonaws.com'.
To connect to media.mydrifts.com.s3.amazonaws.com insecurely, use `--no-check-certificate'.
Unable to establish SSL connection.

Requesting the https URL using the DNS CNAME images.mydrifts.com results in the same errors, with the messages saying that the certificate *.s3.amazonaws.com does not match the requested host name images.mydrifts.com.

Notice that the browsers and wget offer a way to circumvent the mismatched SSL certificate. You could, theoretically, ask your users to add an exception to the browser’s security settings. However, most web users are scared off by a “This Connection is Untrusted” message and will turn away when confronted with that screen.

How to Access S3 via https URLs

As pointed out above, there are two forms of S3 URLs that work with https:

https://s3.amazonaws.com/mybucket.mydomain.com/myObjectKey

and this:

https://simplebucketname.s3.amazonaws.com/myObjectKey

So, in order to get https to work seamlessly with your S3 buckets, you need to either:

- choose a bucket whose name contains no periods and use the virtual host URL, such as https://simplebucketname.s3.amazonaws.com/myObjectKey, or
- use the URL form that specifies the bucket name separately, after the host name, such as https://s3.amazonaws.com/mybucket.mydomain.com/myObjectKey.
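
The choice between the two forms can be sketched as a small Java helper, assuming the default s3.amazonaws.com endpoint and keys that need no escaping. It does not cover the case in the update below (buckets created with CreateBucketConfiguration), where only the virtual host form works; the class and method names are hypothetical.

```java
public class HttpsSafeS3Url {
    // Default S3 endpoint (assumption: no region-specific endpoint).
    static final String ENDPOINT = "s3.amazonaws.com";

    static String httpsUrl(String bucket, String key) {
        if (bucket.indexOf('.') < 0) {
            // No periods: "<bucket>.s3.amazonaws.com" is a single label under
            // s3.amazonaws.com, so the *.s3.amazonaws.com certificate matches.
            return "https://" + bucket + "." + ENDPOINT + "/" + key;
        }
        // Periods in the name: keep the host name as the bare endpoint,
        // and put the bucket name in the path instead.
        return "https://" + ENDPOINT + "/" + bucket + "/" + key;
    }

    public static void main(String[] args) {
        System.out.println(httpsUrl("simplebucketname", "myObjectKey"));
        System.out.println(httpsUrl("mybucket.mydomain.com", "myObjectKey"));
    }
}
```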

Update 25 Aug 2009: For buckets created via the CreateBucketConfiguration API call, the only option is to use the virtual host URL. This is documented in the S3 docs here: http://docs.amazonwebservices.com/AmazonS3/latest/dev/index.html?BucketConfiguration.html

38 replies on “Amazon S3 Gotcha: Using Virtual Host URLs with HTTPS”

I always enjoy learning how other people employ Amazon S3 online storage. I am wondering if you can check out my very own tool CloudBerry Explorer that helps to manage S3 on Windows. It is freeware. With CloudBerry Explorer PRO you can even connect to FTP accounts.

Just ran into the same problem with Amazon S3. I had no idea about the method of doing http://s3.amazonaws.com/mybucket.mydomain.com/myObjectKey over the normal one.

Thanks

I realize this post is kind of old, but I thought I would add a little bit of info to this thread. It’s not so much a decision of browser implementors for this use case. The spec ( http://www.ietf.org/rfc/rfc2818.txt ) specifically states that foo.a.com matches *.a.com, and foo.bar.a.com does not.

There’s also the possibility of CAs offering certificates that match to *.*.(yadda yadda), but that would undercut their sales of *.foo.a.com certs, wouldn’t it?

@Sean Fitzgerald,

True, the RFC specifies the behavior, but browsers are not always fully RFC compliant. Older versions of Firefox used to happily accept a certificate issued to *.mydomain.com for a request to *.subdomain.mydomain.com.

I have to apply SSL on an S3 bucket, but I don’t know where to store my SSL certificate on S3.

@Ashok,

For content hosted in S3 you don’t use your site’s SSL certificate: Amazon uses its own SSL certificate for serving S3-based content. That’s one of the reasons why you need to use the techniques described in this article.

Thanks for posting an article that’s still useful 2 years later. I think the amazon docs link has changed, I believe the new link is:

http://docs.amazonwebservices.com/AmazonS3/latest/dev/index.html?BucketConfiguration.html

@Callum,

Thanks. I have updated the article with the corrected link.

Thanks, this article was very helpful in identifying the problem I was having when using a bucket on S3 with a period (.) in the bucket name.

The error I was getting:

The certificate is only valid for the following domain names:

*.s3.amazonaws.com , s3.amazonaws.com

I had wondered if the extra space before the comma in the above error meant a trailing space had been inserted in the certificate details, and could have spent a long time on a wild goose chase, but your article saved me from that.

I tried placing my bucket name with the period after the trailing slash (the first option you suggest), but got the error:

The bucket you are attempting to access must be addressed using the specified endpoint. Please send all future requests to this endpoint.

So in the end I took the simple option of creating a new bucket with no period in the name, and that’s worked fine.

cheers

Garve

@Garve,

I’m glad this article saved you time.

The error you mention, “The bucket you are attempting to access must be addressed using the specified endpoint. Please send all future requests to this endpoint.” happens when you use the wrong region’s endpoint to access a bucket that was created in a different region. Perhaps you created the bucket in the EU region and were trying to use the .s3.amazonaws.com endpoint?

This page in the S3 docs explains as follows:

I think you’re right. It’s an EU bucket, but I was using S3Fox (Firefox plugin) and the URL it generates uses the main Amazon S3 root domain instead of the correct one for the bucket.

I tried again using the Amazon Console, and it gave me the correct https://s3-eu-west-1.amazonaws.com URL.

cheers

Garve

For US standard it is “s3.amazonaws.com” as per their endpoint documentation in http://docs.aws.amazon.com/general/latest/gr/rande.html

I would like to connect to S3 without a certificate and wonder if there is a way to do this.

@jeff,

You don’t need your own certificate to use S3 via SSL. Just use “https://” when you talk directly to S3, or use the “secure” connection methods provided by your S3 client library.

Thanks for your swift response.

I am using the AWS SDK to connect to S3 and keep getting a “signature does not match” message.

I am trying to “ignore” the certificate problem as described here:

http://stackoverflow.com/questions/1219208/is-it-possible-to-get-java-to-ignore-the-trust-store-and-just-accept-whatever

but I am now trying to modify the HttpClient class of the AWS SDK which I am finding complex.

I am using “https://” to talk to S3 as far as I can tell.

Hi,

I am badly stuck. On my local machine everything works, but on the Windows server it fails with the following message:

javax.servlet.ServletException: com.amazonaws.AmazonClientException: Unable to execute HTTP request: Connection to https://cordellbucket.s3.amazonaws.com refused

I am running a simple program to upload to S3 bucket.

AmazonS3 s3Client = new AmazonS3Client(new PropertiesCredentials(
        this.getClass().getClassLoader().getResourceAsStream(
                "AwsCredentials.properties")));

// Collect the PartETag returned for each uploaded part.
List<PartETag> partETags = new ArrayList<PartETag>();

// Step 1: Initialize.
System.out.println("Step 1: Initialize–>>-.");
InitiateMultipartUploadRequest initRequest = new
        InitiateMultipartUploadRequest(existingBucketName, newKeyName1);
System.out.println("Request created");
InitiateMultipartUploadResult initResponse =
        s3Client.initiateMultipartUpload(initRequest);
System.out.println("Response created");

File file = new File(filePath);
long contentLength = file.length();
long partSize = 5242880; // Set part size to 5 MB.

try {
    // Step 2: Upload parts.
    System.out.println("Step 2: Upload parts.");
    long filePosition = 0;
    for (int i = 1; filePosition < contentLength; i++) {
        // Last part can be less than 5 MB. Adjust part size.
        partSize = Math.min(partSize, (contentLength - filePosition));

        // Create request to upload a part.
        UploadPartRequest uploadRequest = new UploadPartRequest()
                .withBucketName(existingBucketName).withKey(newKeyName1)
                .withUploadId(initResponse.getUploadId()).withPartNumber(i)
                .withFileOffset(filePosition)
                .withFile(file)
                .withPartSize(partSize);

        // Upload part and add response to our list.
        partETags.add(
                s3Client.uploadPart(uploadRequest).getPartETag());

        filePosition += partSize;
    }

    // Step 3: complete.
    System.out.println("Step 3: complete.");
    CompleteMultipartUploadRequest compRequest = new
            CompleteMultipartUploadRequest(
                    existingBucketName,
                    newKeyName1,
                    initResponse.getUploadId(),
                    partETags);
    CompleteMultipartUploadResult cmur = s3Client.completeMultipartUpload(compRequest);
    System.out.println("ETag – " + cmur.getETag());
    System.out.println("Get Location – " + cmur.getLocation());
} catch (AmazonClientException e) {
    // On failure, abort the upload so S3 does not keep the orphaned parts.
    s3Client.abortMultipartUpload(new AbortMultipartUploadRequest(
            existingBucketName, newKeyName1, initResponse.getUploadId()));
    throw e;
}

Please Help.

@Smita,

Usually that type of error is transient and goes away. Retry it.

By the way, when I visit your bucket in a web browser by clicking on the link, it lists the contents of the bucket. Do you intend for your bucket contents to be publicly readable? If not, set the security appropriately. See http://docs.aws.amazon.com/AmazonS3/latest/UG/EditingBucketPermissions.html for more details.

Hey, I load data from S3 using Pig. Whenever I load, I get HTTP request warning messages, and they run for nearly 30 minutes. How can I avoid those warning messages? My data size is 400 MB.

thanks in advance

@Saranya,

What do the messages say?

Are you able to access the objects in that bucket from a simple script that uses the S3 API?

I am using the jets3t jar, and I have also specified the S3 credentials in the config file. Whenever I try to load S3 data using Pig, I get the following warning message:

WARN org.jets3t.service.impl.rest.httpclient.RestS3Service – Response ‘/system%2Fmetrics%2F2013%2F08%2F24%2F420’ – Unexpected response code 404, expected 200

@Saranya,

Perhaps the Jets3t mailing list is the best place to ask your question: https://groups.google.com/forum/#!forum/jets3t-users . If that is an ignorable warning, that mailing list can help you turn it off.

Thanks Shlomo, but I have been facing this issue for the past 4 days and have not found any workaround.

It’s working perfectly on my local machine, but on the Windows server it gives the error. I don’t know whether it’s related to one of the jars or to a firewall issue; I’m not able to understand it.

Anyway, thanks for telling me about the permissions. I am new to Amazon, so I’m not at all aware of all this.

If you could please help me with that error, that would be much appreciated.

Thanks a lot.

Cheers,

Smita

@Smita,

The place for that kind of support is the AWS EC2 Developer Forums. https://forums.aws.amazon.com/forum.jspa?forumID=30#

Hi,

I am wondering if this is still the case if using CloudFront?

@Julian,

CloudFront has similar issues. You need to use the Custom SSL Certificates for CloudFront feature to serve HTTPS content using your own CNAME. Otherwise, you have to use the CloudFront distribution’s endpoint to serve HTTPS.

Hey man, I’m trying to connect to s3.amazonaws.com and I’m getting this error:

Could not connect to “s3.amazonaws.com”

XML parsing error.

I can’t find any solution on Google. Thanks in advance.

@Qiqa,

Does this happen consistently?

What client are you using to connect – browser, command-line, etc.?

@Swidler

I’m using “ForkLift”, currently it’s stuck on this error and I cannot continue. It’s my first time experiencing this kind of error.

@Qiqa,

This sounds like something the ForkLift support folks should be able to help you with. http://www.binarynights.com/forklift/support

@Shlomo Swidler

Seems like it’s a problem with the Wi-Fi here at the office; I was able to use it at home with my own network. Thank you anyway.

Thanks very much~

Hi,

I’m able to upload files to S3 using the AWS command line tool, but not from a Java program using AmazonS3Client. The Java program contains the following three lines:

AWSCredentials credentials = new BasicAWSCredentials("abc", "xyz");
AmazonS3Client amazonS3Client = new AmazonS3Client(credentials);
amazonS3Client.putObject(new PutObjectRequest("my-bucket", "/dir1/f1", new File("/usr/myfile1")));

I get a stack trace as follows:

Exception in thread "main" com.amazonaws.AmazonClientException: Unable to execute HTTP request: sun.security.validator.ValidatorException: No trusted certificate found
    at com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:471)
    at com.amazonaws.http.AmazonHttpClient.execute(AmazonHttpClient.java:295)
    at com.amazonaws.services.s3.AmazonS3Client.invoke(AmazonS3Client.java:3699)
    at com.amazonaws.services.s3.AmazonS3Client.putObject(AmazonS3Client.java:1434)
    at xxx.xxx.xxx.xxxedge.S3Target.main(S3Target.java:114)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:601)
    at com.intellij.rt.execution.application.AppMain.main(AppMain.java:120)
Caused by: javax.net.ssl.SSLHandshakeException: sun.security.validator.ValidatorException: No trusted certificate found
    at sun.security.ssl.Alerts.getSSLException(Alerts.java:192)
    at sun.security.ssl.SSLSocketImpl.fatal(SSLSocketImpl.java:1886)
    at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:276)
    at sun.security.ssl.Handshaker.fatalSE(Handshaker.java:270)
    at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1341)
    at sun.security.ssl.ClientHandshaker.processMessage(ClientHandshaker.java:153)
    at sun.security.ssl.Handshaker.processLoop(Handshaker.java:868)
    at sun.security.ssl.Handshaker.process_record(Handshaker.java:804)
    at sun.security.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:1016)
    at sun.security.ssl.SSLSocketImpl.performInitialHandshake(SSLSocketImpl.java:1312)
    at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1339)
    at sun.security.ssl.SSLSocketImpl.startHandshake(SSLSocketImpl.java:1323)
    at org.apache.http.conn.ssl.SSLSocketFactory.connectSocket(SSLSocketFactory.java:534)
    at org.apache.http.conn.ssl.SSLSocketFactory.connectSocket(SSLSocketFactory.java:402)
    at com.amazonaws.http.conn.ssl.SdkTLSSocketFactory.connectSocket(SdkTLSSocketFactory.java:112)
    at org.apache.http.impl.conn.DefaultClientConnectionOperator.openConnection(DefaultClientConnectionOperator.java:178)
    at org.apache.http.impl.conn.ManagedClientConnectionImpl.open(ManagedClientConnectionImpl.java:304)
    at org.apache.http.impl.client.DefaultRequestDirector.tryConnect(DefaultRequestDirector.java:610)
    at org.apache.http.impl.client.DefaultRequestDirector.execute(DefaultRequestDirector.java:445)
    at org.apache.http.impl.client.AbstractHttpClient.doExecute(AbstractHttpClient.java:863)
    at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:82)
    at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:57)
    at com.amazonaws.http.AmazonHttpClient.executeOneRequest(AmazonHttpClient.java:685)
    at com.amazonaws.http.AmazonHttpClient.executeHelper(AmazonHttpClient.java:460)
    ... 9 more
Caused by: sun.security.validator.ValidatorException: No trusted certificate found
    at sun.security.validator.SimpleValidator.buildTrustedChain(SimpleValidator.java:384)
    at sun.security.validator.SimpleValidator.engineValidate(SimpleValidator.java:134)
    at sun.security.validator.Validator.validate(Validator.java:260)
    at sun.security.ssl.X509TrustManagerImpl.validate(X509TrustManagerImpl.java:326)
    at sun.security.ssl.X509TrustManagerImpl.checkTrusted(X509TrustManagerImpl.java:231)
    at sun.security.ssl.X509TrustManagerImpl.checkServerTrusted(X509TrustManagerImpl.java:126)
    at sun.security.ssl.ClientHandshaker.serverCertificate(ClientHandshaker.java:1323)
    ... 28 more

@Ken,

Glad to see you found the resolution on the AWS Forums https://forums.aws.amazon.com/thread.jspa?messageID=590161&tstart=0.

Think I found a fix.

Looks like the default client behavior is to use https. To use http, you must add the following line:

amazonS3Client.setEndpoint("http://s3.amazonaws.com");

Great article… I was looking for an answer for this behavior and I couldn’t find it till I came to your post.

Thank you so much! This was helpful.

[…] this is expected because amazon’s s3 certificate only covers one level of subdomains (http://shlomoswidler.com/2009/08/amazon-s3-gotcha-using-virtual-host.html). Therefore, I changed the URL I’m uploading to to be https://s3.amazonaws.com/bucket.name, […]

For those who want to access an s3 bucket using SSL via their own domain name, one solution that has become very simple to use is to set up a cloudfront distribution.

CloudFront supports SSL certificates and custom domains via CNAMEs, and Amazon will now generate SSL certificates for your domains for free (AWS Certificate Manager) if you’re serving them from CloudFront (or an EC2 load balancer).

How To

1) create an s3 bucket, put your content in. You can keep it private – there will be no publicly accessible s3.amazonaws.com URLs.

2) go to cloudfront and set up an origin access identity.

3) Now create a distribution with the s3 bucket as the origin and in the settings tell it to automatically set the bucket policy with the origin access identity you just created.

4) While setting up your distribution there will be an option for an SSL certificate. Unless you work with users who have 10+ year old computers, you can safely use the SNI (free) option and create a certificate for free with AWS certificate manager.

5) Once this is all set up, you can point yourdomain.com at the cloudfront endpoint via a CNAME entry in your DNS server (if you use route 53 this is very easy).

When finished, you should be able to go to https://yourdomain.com and you should get an encrypted connection without browser ssl errors.
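
For step 3, it may help to see roughly what the policy CloudFront writes to the bucket looks like. This Java sketch assembles such a policy as JSON text; the bucket name and origin access identity ID are hypothetical placeholders, and in practice you would let CloudFront (or the console) generate the policy rather than build it by hand. It grants s3:GetObject to the origin access identity only, which is why the bucket itself can stay private.

```java
public class OaiBucketPolicy {
    // Builds a bucket policy that lets one CloudFront origin access identity
    // read objects; no other principal is granted anything by this statement.
    static String policyFor(String bucket, String oaiId) {
        return String.join("\n",
            "{",
            "  \"Version\": \"2012-10-17\",",
            "  \"Statement\": [{",
            "    \"Effect\": \"Allow\",",
            "    \"Principal\": {\"AWS\": \"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity " + oaiId + "\"},",
            "    \"Action\": \"s3:GetObject\",",
            "    \"Resource\": \"arn:aws:s3:::" + bucket + "/*\"",
            "  }]",
            "}");
    }

    public static void main(String[] args) {
        // Hypothetical bucket name and OAI ID, for illustration only.
        System.out.println(policyFor("example-bucket", "E2EXAMPLE123"));
    }
}
```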