Dynamic infrastructure can be a pain to accommodate in applications. How do you keep track of the set of web servers in your dynamically scaling web farm? How do your apps keep up with which server is currently running what service? How can applications be written so they don’t need to care if a service gets moved to a different machine? There are a number of techniques available, and I’m happy to share implementation code for one that I’ve found useful.

One thing common to all these techniques: they all allow the application code to refer to services by name instead of IP address. This makes sense because the whole point is not to care about the IP address running the service. Every one of these techniques offers a way to translate the name of the service into an IP address behind the scenes, without your application knowing about it. Where the techniques differ is in how they provide this indirection.

Note that there are four usage scenarios that we might want to support:

- Service inside the cloud, client inside the cloud

- Service inside the cloud, client outside the cloud

- Service outside the cloud, client inside the cloud

- Service outside the cloud, client outside the cloud

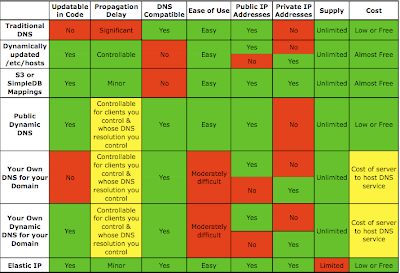

Let’s take a look at a few techniques to provide loose coupling between dynamically movable services and their IP addresses, and see how they can support these usage scenarios.

Dynamic DNS

Dynamic DNS is the classic way of handling dynamically assigned roles: DNS entries on a DNS server are updated via an API (usually HTTP/S) when a server claims a given role. The DNS entry is updated to point to the IP address of the server claiming that role. For example, your DNS may have a production-master-db.example.com record. When the production deployment’s master database starts up it can register itself with the DNS provider to claim the production-master-db.example.com DNS record, pointing that DNS entry to its own IP address. Any client of the database can use the host name production-master-db.example.com to refer to the master database, and as long as the machine that last claimed that DNS entry is still alive, it will work.

When running your service within EC2, Dynamic DNS servers running outside EC2 will see the source IP address for the Dynamic DNS registration request as the public IP address of the instance. So if your Dynamic DNS is hosted outside EC2 you can’t easily register the internal IP addresses. Often you want to register the internal IP address because, from within the same EC2 region, it costs less to use the private IP address than the public IP address. One way to use Dynamic DNS with private IPs is to build your own Dynamic DNS service within EC2 and set up all your application instances to use that DNS server for your domain’s DNS lookups. When instances register with that EC2-based DNS server, the Dynamic DNS service will detect the source of the registration request as being the internal IP address for the instance, and it will assign that internal IP address to the DNS record.

Another way to use Dynamic DNS with internal IP addresses is to use DNS services such as DNSMadeEasy whose API allows you to specify the IP address of the server in the registration request. You can use the EC2 instance metadata to discover your instance’s internal IP address via the URL http://169.254.169.254/latest/meta-data/local-ipv4 .
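Here’s a minimal sketch of that registration step. The metadata URL is the one given above; the endpoint https://dns.example.com/update and its hostname/ip parameters are hypothetical placeholders of mine, not DNSMadeEasy’s actual API, so substitute whatever your provider documents. It also assumes the instance allows plain (token-less) metadata requests.

```shell
#!/bin/bash
# Sketch: register an instance's private IP with a Dynamic DNS provider
# whose API accepts an explicit address. The endpoint and parameter names
# are hypothetical; consult your provider's API documentation.

METADATA_URL="http://169.254.169.254/latest/meta-data/local-ipv4"
DDNS_ENDPOINT="https://dns.example.com/update"   # hypothetical placeholder

# Print the instance's private IP address (only works on an EC2 instance).
private_ip() {
  curl --silent --fail --max-time 5 "$METADATA_URL"
}

# Build the registration URL for hostname $1 and IP address $2.
registration_url() {
  printf '%s?hostname=%s&ip=%s\n' "$DDNS_ENDPOINT" "$1" "$2"
}

# Claim DNS name $1 for this instance's private IP.
register_private_ip() {
  local ip
  ip=$(private_ip) || return 1
  curl --silent --fail "$(registration_url "$1" "$ip")"
}

# Usage (on an EC2 instance):
#   register_private_ip production-master-db.example.com
```

Keeping the URL construction separate from the network calls makes it easy to check what would be sent before pointing the script at a real provider.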

Here’s how Dynamic DNS fares in each of the above usage scenarios:

Scenario 1: Service in the cloud, client inside the cloud: Only if you run your own DNS inside EC2 or use a special DNS service that supports specifying the internal IP address.

Scenario 2: Service in the cloud, client outside the cloud: Can use public Dynamic DNS providers.

Scenario 3: Service outside the cloud, client inside the cloud: Can use public Dynamic DNS providers.

Scenario 4: Service outside the cloud, client outside the cloud: Can use public Dynamic DNS providers.

Update window: Changes are available immediately to all DNS servers that respect the zero TTL on the Dynamic DNS server (guaranteed only for Scenario 1). DNS propagation delay penalty may still apply because not all DNS servers between the client and your Dynamic DNS service necessarily respect TTLs properly.

Pros: Easy to integrate into existing scripts, as long as you only need to register public IP addresses.

Cons: Running your own DNS (to support private IP addresses) is not trivial, and introduces a single point of failure.

Bottom line: Dynamic DNS is useful when both the service and the clients are in the cloud, and in the other usage scenarios if a DNS propagation delay is acceptable.

Elastic IP Addresses

In AWS you can have an Elastic IP address: an IP address that can be associated with any instance within a given region. It’s very useful when you want to move your service to a different instance (perhaps because the old one died?) without changing DNS and waiting for those changes to propagate across the internet to your clients. You can put code into the startup sequence of your instances that associates the desired Elastic IP address, making this approach very scriptable. For added flexibility you can write those scripts to accept configurable input (via settings in the user-data or some data stored in S3 or SimpleDB) that specifies which Elastic IP address to associate with the instance.
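As a rough sketch, the startup step could look like the following. It assumes the AWS CLI is installed on the instance with permission to associate addresses, and that the Elastic IP is identified by an allocation ID; the ID shown is made up, and the function name is my own.

```shell
#!/bin/bash
# Sketch: associate an Elastic IP with this instance at boot.
# Assumes the AWS CLI is installed and credentialed; the allocation ID
# in the usage line is a made-up example.

INSTANCE_ID_URL="http://169.254.169.254/latest/meta-data/instance-id"

# Associate the Elastic IP with allocation ID $1 with this instance.
associate_elastic_ip() {
  local allocation_id=$1 instance_id
  # Ask the instance metadata service which instance we are running on.
  instance_id=$(curl --silent --fail --max-time 5 "$INSTANCE_ID_URL") || return 1
  aws ec2 associate-address \
    --instance-id "$instance_id" \
    --allocation-id "$allocation_id"
}

# Usage, e.g. from a startup script, with the ID passed in via user-data:
#   associate_elastic_ip eipalloc-0123456789abcdef0
```

Passing the allocation ID as an argument keeps the script itself identical across instances; only the configuration input changes.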

A cool feature of Elastic IP addresses: if clients use the DNS name of the IP address (“ec2-1-2-3-4.compute-1.amazonaws.com”) instead of the numeric IP address you can have extra flexibility: clients within EC2 will get routed via the internal IP address to the service while clients outside EC2 will get routed via the public IP address. This seamlessly minimizes your bandwidth cost. To take advantage of this you can put a CNAME entry in your domain’s DNS records.

Summary of Elastic IP addresses:

Scenario 1: Service in the cloud, client inside the cloud: Trivial, client should use Elastic IP’s DNS name (or set up a CNAME).

Scenario 2: Service in the cloud, client outside the cloud: Trivial, client should use Elastic IP’s DNS name (or set up a CNAME).

Scenario 3: Service outside the cloud, client inside the cloud: Elastic IPs do not help here.

Scenario 4: Service outside the cloud, client outside the cloud: Elastic IPs do not help here.

Update window: Changes are available in under a minute.

Pros: Requires minimal setup, easy to script.

Cons: No support for running the service outside the cloud.

Bottom line: Elastic IPs are useful when the service is inside the cloud and an approximately one minute update window is acceptable.

Generating Hosts Files

Before the OS queries DNS for the IP address of a hostname it checks in the hosts file. If you control the OS of the client you can generate the hosts file with the entries you need. If you don’t control the OS of the client then this technique won’t help.
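To make the file format concrete, here’s a small sketch that looks up a name in a hosts-format file, roughly the way the resolver does conceptually. It is only an illustration: the function name is mine, and on typical Linux systems the real lookup order is configured in /etc/nsswitch.conf.

```shell
#!/bin/bash
# Sketch: look up a name in a hosts-format file. Each line is
# "<IP> <name> [alias...]" and '#' starts a comment.

# Print the first IP address mapped to name $1 in hosts file $2
# (default /etc/hosts); return non-zero if the name is absent.
lookup_in_hosts_file() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v name="$name" '
    { sub(/#.*/, "") }                       # strip comments
    { for (i = 2; i <= NF; i++)              # fields 2..NF are names
        if ($i == name) { print $1; found = 1; exit } }
    END { exit !found }
  ' "$file"
}

# Usage:
#   lookup_in_hosts_file webserver1
```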

There are three important ingredients to get this to work:

- A central repository that stores the current name-to-IP address mappings.

- A method to update the repository when mappings are updated.

- A method to regenerate the hosts file on each client, running on a regular schedule.

The central repository can be S3 or SimpleDB, or a database, or security group tags. If you’re concerned about storing your AWS access credentials on each client (and if these clients are web servers then they may not need your AWS credentials at all) then the database is a natural fit (and web servers probably already talk to the database anyway).

If your service is inside the cloud and you want to support clients both inside and outside the cloud you’ll need to maintain two separate repository tables – one containing the internal IP addresses of the services (for use generating the hosts file of clients inside the cloud) and the other containing the public IP addresses of the services (for use generating the hosts file of clients outside the cloud).

Summary of Generating Hosts Files:

Scenario 1: Service in the cloud, client inside the cloud: Only if you control the client’s OS, and register the service’s internal IP address.

Scenario 2: Service in the cloud, client outside the cloud: Only if you control the client’s OS, and register the service’s public IP address.

Scenario 3: Service outside the cloud, client inside the cloud: Only if you control the client’s OS.

Scenario 4: Service outside the cloud, client outside the cloud: Only if you control the client’s OS.

Update Window: Controllable via the frequency with which you regenerate the hosts file. Can be as short as a few seconds.

Pros: Works on any client whose OS you control, whether inside or outside the cloud, and with services either inside or outside the cloud. And, assuming your application already uses a database, this technique adds no additional single points of failure.

Cons: Requires you to control the client’s OS.

Bottom line: Good for all scenarios where the client’s OS is under your control and you need refresh times of a few seconds.

A Closer Look at Generating Hosts Files

Here is an implementation of this technique using a database as the repository, using Java wrapped in a shell script to regenerate the hosts file, and using the mysql command-line client to perform the updates. This implementation was inspired by the work of Edward M. Goldberg of myCloudWatcher.

Creating the Repository

Here is the command to create the necessary database (“Hosts”) and table (“hosts”):

mysql -h dbHostname -u dbUsername -pDBPassword -e \

'CREATE DATABASE IF NOT EXISTS Hosts; \

USE Hosts; \

DROP TABLE IF EXISTS \`hosts\`; \

CREATE TABLE \`hosts\` ( \

\`record\` TEXT \

) DEFAULT CHARSET=latin1; \

INSERT INTO \`hosts\` VALUES ("127.0.0.1 localhost localhost.localdomain");'

Notice that we pre-populate the repository with an entry for “localhost”. This is necessary because the process that updates the hosts file will completely overwrite the old one, and that’s where the localhost entry is supposed to live. Removing the localhost entry could wreak havoc on networking services – so we preserve it by ensuring a localhost entry is in the repository.

Updating the Repository

To claim a role, a server registers the role’s hostname and its own IP address in the repository. In this example the hostname is “webserver1” and the IP address is 1.2.3.4. Here’s the one-liner:

mysql -h dbHostname -u dbUsername -pDBPassword -e \

'DELETE FROM Hosts.\`hosts\` WHERE record LIKE "% webserver1"; \

INSERT INTO Hosts.\`hosts\` (\`record\`) VALUES ("1.2.3.4 webserver1");'

The registration can be performed by the server claiming the role or by an outside agent. Make sure you substitute the real host name and the correct IP address.

On an EC2 instance you can get the private and public IP addresses of the instance via the instance metadata URLs. For example:

$ privateIp=$(curl --silent http://169.254.169.254/latest/meta-data/local-ipv4)

$ echo $privateIp

10.209.206.223

$ publicIp=$(curl --silent http://169.254.169.254/latest/meta-data/public-ipv4)

$ echo $publicIp

75.101.198.120
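Putting the two pieces together, an instance could claim a role under its own private IP with something like the sketch below. The function names are mine, the database placeholders are the same as in the commands above, and the character checks are an extra precaution I’ve added so the values can be embedded in the SQL safely.

```shell
#!/bin/bash
# Sketch: have an EC2 instance claim a role under its own private IP.
# dbHostname, dbUsername and DBPassword are placeholders.

# Print the SQL that replaces any existing record for hostname $1
# with "<ip> <hostname>". Rejects unexpected characters.
build_register_sql() {
  local host=$1 ip=$2
  case $host in ''|*[!A-Za-z0-9.-]*) return 1 ;; esac
  case $ip in ''|*[!0-9.]*) return 1 ;; esac
  printf 'DELETE FROM Hosts.`hosts` WHERE record LIKE "%% %s"; ' "$host"
  printf 'INSERT INTO Hosts.`hosts` (`record`) VALUES ("%s %s");\n' "$ip" "$host"
}

# Register hostname $1 with this instance's private IP address.
register_role() {
  local host=$1 ip sql
  ip=$(curl --silent --fail --max-time 5 \
    http://169.254.169.254/latest/meta-data/local-ipv4) || return 1
  sql=$(build_register_sql "$host" "$ip") || return 1
  mysql -h dbHostname -u dbUsername -pDBPassword -e "$sql"
}

# Usage (on the instance that should become webserver1):
#   register_role webserver1
```

To register the public address instead (for clients outside the cloud), swap local-ipv4 for public-ipv4 in the metadata URL.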

Regenerating the Hosts File

The final piece is recreating the hosts file based on the contents of the database table. Notice how the table records are already in the correct format for a hosts file. It would be simple to dump the output of the entire table to the hosts file:

mysql -h dbHostname -u dbUsername -pDBPassword --silent --column-names=0 -e \

'SELECT \`record\` FROM Hosts.\`hosts\`' | uniq > /etc/hosts # This is simple and wrong

But it would also be wrong to do that! Every so often the database connection might fail and you’d be left with a hosts file that was completely borked – and that would prevent the client from properly resolving the hostnames of your services. It’s safer to only overwrite the hosts file if the SQL query actually returns results. Here’s some Java code that does that:

Class.forName("com.mysql.jdbc.Driver").newInstance();

Connection conn = DriverManager.getConnection("jdbc:mysql://" + dbHostname + "/?user=" +

dbUsername + "&password=" + dbPassword);

String outputFileName = "/etc/hosts";

Statement stmt = conn.createStatement();

ResultSet res = stmt.executeQuery("SELECT record FROM Hosts.hosts");

HashSet<String> uniqueMe = new HashSet<String>();

PrintStream out = System.out;

if (res.isBeforeFirst()) {

out = new PrintStream(outputFileName);

}

while (res.next()) {

String record = res.getString(1);

if (uniqueMe.add(record)) {

out.println(record);

}

}

out.println();

out.close();

res.close();

stmt.close();

conn.close();

This code uses the MySQL Connector/J JDBC driver. It overwrites the hosts file only if the database query actually returned records.
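If you’d rather stay in shell, here’s an alternative sketch of the same safeguard (it is not the Java implementation above). It fetches the records first, checks that the query succeeded and that a localhost entry is present, and only then swaps the new file into place with a rename, so a failure never leaves a partial hosts file. The function names are mine and the database placeholders are the ones used throughout.

```shell
#!/bin/bash
# Sketch: regenerate a hosts file without ever leaving a partial one.

# Print one hosts record per line (placeholders as in the article).
fetch_host_records() {
  mysql -h dbHostname -u dbUsername -pDBPassword --silent --column-names=0 \
    -e 'SELECT `record` FROM Hosts.`hosts`'
}

# Rewrite hosts file $1 (default /etc/hosts) from the repository.
regenerate_hosts() {
  local target=${1:-/etc/hosts} records tmp
  # Query failed: keep the old file.
  records=$(fetch_host_records) || return 1
  # Empty or suspicious result (no localhost entry): keep the old file.
  printf '%s\n' "$records" | grep -qw 'localhost' || return 1
  # Write next to the target so the final mv is a rename on one filesystem.
  tmp=$(mktemp "${target}.XXXXXX") || return 1
  # awk drops duplicate lines while preserving their order.
  if printf '%s\n' "$records" | awk '!seen[$0]++' > "$tmp" \
      && chmod 644 "$tmp"; then
    mv -f "$tmp" "$target" && return 0
  fi
  rm -f "$tmp"
  return 1
}

# Usage (as root):
#   regenerate_hosts /etc/hosts
```

Unlike piping through uniq, the awk filter removes duplicates even when they are not adjacent.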

Scheduling the Regeneration

Now that you have a script that regenerates that hosts file (you did wrap that Java program into a script, right?) you need to place that script on each client and schedule a cron job to run it regularly. Via cron you can run it as often as every minute if you want – it adds a negligible amount of load to the database server so feel free – but if you need more frequent updates you’ll need to write your own driver to call the regeneration script more frequently.
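Here’s one way such a driver might look, as a minimal sketch. The script path /usr/local/bin/regenerate-hosts.sh is a hypothetical name for your wrapped regeneration script, and run_every is a helper of my own.

```shell
#!/bin/bash
# Sketch: run the regeneration script more often than cron's
# one-minute granularity allows. The script path is hypothetical.
#
# Every minute via cron (crontab entry):
#   * * * * * /usr/local/bin/regenerate-hosts.sh

# Run a command repeatedly: run_every <seconds> <iterations> <command...>
# An iteration count of 0 means "loop forever".
run_every() {
  local interval=$1 iterations=$2 i=0
  shift 2
  while [ "$iterations" -eq 0 ] || [ "$i" -lt "$iterations" ]; do
    # A failed run is reported but does not stop the loop.
    "$@" || echo "regeneration failed" >&2
    i=$((i + 1))
    sleep "$interval"
  done
}

# Usage: regenerate every 10 seconds until the process is stopped.
#   run_every 10 0 /usr/local/bin/regenerate-hosts.sh
```

If you go this route, start the loop from your init system or a supervisor so it is restarted if it ever exits.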

If you find this technique helpful – or have any questions about it – I’d be happy to hear from you in the comments.

Update December 2010: Guy Rosen guest-authored this article on using AWS’s DNS service Route 53 to track instances.