EBS offers the ability to have a “virtual disk drive” whose lifetime is independent of any EC2 instance. You can attach EBS drives to any running instance, and any changes made to the drive will persist even after the instance is terminated. You can also set up an EBS drive to be the root filesystem for an instance – giving you the benefits of an always-on instance but without paying for it when it’s not in use – and this article shows you how to do that. I also explore how to estimate the cost savings you can achieve using this solution.

I’m not the first person to think of this idea: credit goes to AWS forum users rickdane for starting the discussion and N. Martin and Troy Volin for posts in this AWS forum thread that describe most of the heavy-lifting – this solution is largely based on their work.

Update December 2009: With the introduction of native support for boot-from-EBS AMIs, the techniques in this article are largely unnecessary. But they’re still pretty cool.

Note: this article assumes you are comfortable in the linux shell and familiar with the EC2 AMI tools and EC2 API tools.

Why Boot EC2 Instances from EBS?

My company runs applications in EC2, and we test new application features in EC2 (after testing them locally) before we deploy them to our production environment. At first, each time we had a new feature to test out, we would construct a testing environment as follows:

- Launch a new instance of our base AMI (based on an Alestic Ubuntu Hardy Server AMI, which I highly recommend, and customized with our own basic application server stack).

- Make the necessary environmental changes (e.g. adding monitoring services) to the test instance.

- Deploy the application (the one with new features we need to test) to the test instance.

- Deploy the test database from S3 to the test instance.

- Update the test database with any schema or data changes necessary.

- Test and debug the application.

- Save the updated test database to S3 for later use.

- Terminate the test instance.

This process worked great at first (even if it was a manual process). Our application is a WAR and a bunch of jar files, so they were easily uploaded to the test instance. We stored our test database in S3 as a gzipped MySQL mysqldump file, and it was easy to import into / export from MySQL. That is, until we got to the point where our test database was big enough for it to take a long time – over an hour – to reconstitute. At that point it became very annoying to bring up the test environment.

The last thing you want, as a developer, is a test environment that is difficult or annoying to set up. You want testing to be easy, quick, and inexpensive (otherwise you will start looking for shortcuts to avoid testing, which is not good for quality). Once our test environment began to require almost an hour just to set up, we realized it was no longer serving our needs. I began researching a setup that:

- Is ready-to-go within a short time (less than five minutes)

- Contains the most-recent environment (tools, application, and database) already

- Only costs money when it is being used

Basically, we wanted the benefits of a server that is always-on but without paying for it when we weren’t using it.

That’s the “why”. Read on for the “what” and the “how”.

Ingredients and Tools

The solution consists of two pieces:

- An AMI that can boot from an EBS drive (the “boot AMI”)

- An EBS drive that contains the bootable linux stack and, later, anything else you add (the “bootable EBS volume”)

The main tool used to put the pieces together is the linux utility pivot_root. This program can only be run by the /sbin/init process (the startup process itself, pid 1), and it “swaps” the root filesystem out from under the OS and replaces it with a directory you specify. This will be the root directory of the EBS drive.

EBS volumes are attached to specific devices (/dev/sdb through /dev/sdp), and you’ll need to choose what attach device you want the boot AMI to use. We could, theoretically, build the boot AMI to read the attach device name from the user-data provided at launch time. But this would require bringing up networking in order to download the user-data to the instance, which is complicated. Instead of doing this we will hardcode the attach device into the boot AMI. You will need to remember what device you chose in order to know where to attach the EBS drive when you launch an instance of the boot AMI. A good way to remember the chosen attach device is to name the boot AMI accordingly, for example boot-from-EBS-to-dev-sdp-32bit-20090624.

As part of choosing the attach device you’ll need to know the mknod minor number associated with the device. mknod minor numbers begin at 0 (for /dev/sda) and progress in increments of 16 (so /dev/sdb is number 16, /dev/sdc is number 32, etc.) until /dev/sdp (which is number 240). mknod minor numbers are detailed in the Documentation/devices.txt file in the linux kernel source code. In the procedure below I use /dev/sdp (“p” for “pivot_root”), with mknod minor number 240.
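If you pick a different attach device, the minor number follows directly from the rule above. Here is a minimal sketch that computes it; the function name sd_minor is my own, and it assumes whole-disk names from sda through sdp.

```shell
# Print the mknod minor number for a whole-disk SCSI device (sda..sdp).
# sd_minor is a hypothetical helper name, not part of any tool.
sd_minor() {
    name=${1#/dev/}                  # strip an optional /dev/ prefix
    letter=${name#sd}                # keep only the drive letter, e.g. "p"
    case "$letter" in
        [abcdefghijklmnop]) ;;
        *) echo "unsupported device: $1" >&2; return 1 ;;
    esac
    letters=abcdefghijklmnop
    before=${letters%%"$letter"*}    # the letters that precede ours
    echo $(( ${#before} * 16 ))      # each disk owns a block of 16 minors
}

sd_minor /dev/sdb   # prints 16
sd_minor /dev/sdp   # prints 240
```

The result is the last argument you would pass when creating the device node by hand, for example mknod /dev/sdp b 8 240 (8 is the block major number for SCSI disks).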

The EC2 AMI tools are useful in preparing the bootable EBS drive: they can create an image file from an existing instance. These tools will be used to create a temporary image containing the bootable linux stack copied from the running instance, and this temporary image will then be copied to the EBS drive to make it bootable.

How to Set it Up

Here is an outline of the setup process:

- Set up a bootable EBS volume.

- Set up the boot AMI.

The detailed setup instructions follow.

Setting up a bootable EBS volume:

- Launch an instance of an AMI you like. I use the Alestic Ubuntu Hardy Server AMI.

- Create an EBS volume in the same availability zone as the instance you launched. This volume should be large enough to fit everything you plan to put on the root partition (later, not now). It can even exceed 10GB: unlike the “real” root filesystem on EC2 instances which is limited to 10GB, the bootable EBS volume is not limited to this size.

- Once the EBS volume is created, attach it to the instance on /dev/sdp.

- SSH into the instance as root and format the EBS volume:

      mkfs.ext3 /dev/sdp

- Mount the EBS volume to the instance’s filesystem:

      mkdir /ebs && mount /dev/sdp /ebs

- Make an image bundle containing the root filesystem:

      mkdir /mnt/prImage
      ec2-bundle-vol -c cert -k key -u user -e /ebs -r i386 -d /mnt/prImage

  These arguments are the same as you would use to bundle an AMI – please see the Developer Guide: Bundling a Unix or Linux AMI for details. The certificate and private key credentials should be copied to the instance in the /mnt partition so they don’t get bundled into the image and copied to the bootable EBS volume. If you’re creating a 64-bit image you’ll need to substitute -r x86_64 instead.

- Copy the contents of the image to the EBS drive (the loop mount needs an existing mount point, so create it first):

      mkdir -p /mnt/img-mnt
      mount -o loop /mnt/prImage/image /mnt/img-mnt
      rsync -a /mnt/img-mnt/ /ebs/
      umount /mnt/img-mnt

- Prepare the EBS volume for being the target of pivot_root. First, edit /ebs/etc/fstab, changing the entry for / (the root filesystem) from /dev/sda1 to /dev/sdp. Next, create the directory that will hold the old root, remove the SSH key, and unmount the volume:

      mkdir /ebs/old-root
      rm /ebs/root/.ssh/authorized_keys
      umount /ebs
      rmdir /ebs

- Detach the EBS volume from your instance.

Your bootable EBS volume is now ready. You might want to take a snapshot of it. Don’t terminate the EC2 instance yet, because it will be used to create the boot AMI.
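The create, attach, detach, and snapshot steps happen outside the instance. As a rough sketch, here is how they might look with the EC2 API tools. The function only prints the commands rather than running them, and the instance ID, volume ID, zone, and size are hypothetical placeholders (in practice the volume ID comes from the output of ec2-create-volume).

```shell
# Print (do not run) the EC2 API tools commands for the volume side of the
# setup. volume_setup_plan is a hypothetical helper; all IDs are placeholders.
volume_setup_plan() {
    instance=$1; volume=$2; zone=$3; size_gb=$4; device=${5:-/dev/sdp}
    echo "ec2-create-volume -s $size_gb -z $zone"      # same zone as the instance
    echo "ec2-attach-volume $volume -i $instance -d $device"
    echo "# ...format, mount, and populate the volume on the instance..."
    echo "ec2-detach-volume $volume"
    echo "ec2-create-snapshot $volume"                 # optional safety snapshot
}

volume_setup_plan i-87654321 vol-12345678 us-east-1a 50
```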

Setting up the boot AMI:

- Create the mount point where the bootable EBS volume will be mounted:

      mkdir /new-root

- Replace the original /sbin/init with a new one. First, rename the original:

      mv /sbin/init /sbin/init.old

  Then, copy this file into /sbin/init, as follows (the URL is quoted so the shell does not treat the ? as a wildcard):

      curl -o /sbin/init -L 'https://sites.google.com/site/shlomosfiles/clouddevelopertips/init?attredirects=0'

  As mentioned above, if you choose an attach device different than /dev/sdp you should edit the new /sbin/init file to assign DEVNO the corresponding mknod minor number.

  Finally, make it executable:

      chmod 755 /sbin/init

- Clean up the current SSH public key that was used to log in to the instance:

      rm /root/.ssh/authorized_keys

  Not so fast! Once you perform this step you will no longer be able to SSH into the instance. Instead, you can move the SSH authorized_keys file out of the way, as follows:

      mv /root/.ssh/authorized_keys /mnt

- Bundle, upload, and register the AMI. Give the bundle a name that indicates the processor architecture and the device on which it expects the bootable EBS volume to be attached. For example, boot-from-EBS-to-dev-sdp-32bit-20090624.

- If you opted to move the SSH authorized_keys file out of the way in the third step above, restore SSH access to the instance:

      mv /mnt/authorized_keys /root/.ssh/authorized_keys

The boot AMI is now ready to be launched. If you are feeling brave you can terminate the instance. Otherwise, you can leave it running and use it to troubleshoot problems with the launch process – but hopefully this won’t be necessary.
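For the bundle, upload, and register step, here is a sketch of how the naming convention and the commands fit together. Both helper functions are my own inventions, the bucket name is hypothetical, and cert, key, user, ACCESS_KEY, and SECRET_KEY stand in for your real credentials. The second function prints the commands instead of running them.

```shell
# Build a boot AMI name that records the attach device and architecture.
# boot_ami_name and bundle_plan are hypothetical helpers for illustration.
boot_ami_name() {   # args: device, bits (32 or 64), date stamp
    dev=${1#/dev/}
    echo "boot-from-EBS-to-dev-${dev}-${2}bit-${3}"
}

# Print (do not run) the bundle, upload, and register commands.
bundle_plan() {     # args: bundle name, architecture, S3 bucket
    name=$1; arch=$2; bucket=$3
    echo "ec2-bundle-vol -c cert -k key -u user -r $arch -d /mnt -p $name"
    echo "ec2-upload-bundle -b $bucket -m /mnt/$name.manifest.xml -a ACCESS_KEY -s SECRET_KEY"
    echo "ec2-register $bucket/$name.manifest.xml"
}

name=$(boot_ami_name /dev/sdp 32 "$(date +%Y%m%d)")
bundle_plan "$name" i386 my-ami-bucket
```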

How to Launch an Instance

Once the bootable EBS volume and boot AMI are set up, launch instances that boot from the EBS volume as follows:

- Launch an instance of the boot AMI.

- Attach the bootable EBS volume to the chosen device (/dev/sdp in the above instructions).

The boot AMI will wait until a volume is attached to the chosen device, pivot_root to the EBS volume, and then continue its boot sequence from the EBS volume.
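To make that sequence concrete, here is a rough sketch of what a replacement /sbin/init could look like. This is not the script linked above: the structure, the DRY_RUN switch, and the retry interval are my assumptions, and only DEVNO, /new-root, /old-root, and /pre-pivot.sh come from this article. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical sketch of a replacement /sbin/init. NOT the linked script.
DEV=/dev/sdp            # attach device hardcoded into the boot AMI
DEVNO=240               # mknod minor number for /dev/sdp (block major 8)
NEWROOT=/new-root       # mount point created while building the boot AMI
DRY_RUN=${DRY_RUN:-1}   # assumption: default to printing, set to 0 to execute

run() {
    if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "$*"; fi
}

boot_from_ebs() {
    # Create the device node if the boot AMI does not already have it.
    [ -b "$DEV" ] || run mknod "$DEV" b 8 "$DEVNO"
    # Retry until the volume has been attached and can be mounted.
    until run mount -t ext3 "$DEV" "$NEWROOT"; do sleep 5; done
    # Optional hook on the EBS volume, run before the root is swapped.
    if [ -x "$NEWROOT/pre-pivot.sh" ]; then run "$NEWROOT/pre-pivot.sh"; fi
    # Swap roots: the old root ends up under /old-root on the EBS volume.
    run cd "$NEWROOT"
    run pivot_root . old-root
    # Hand control to the real init that lives on the EBS volume.
    run exec chroot . /sbin/init
}

boot_from_ebs
```

The final exec works because the bootable EBS volume was copied from the instance before /sbin/init was replaced, so the init found there is the original one.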

Some troubleshooting tips:

- To troubleshoot problems launching the instance you should look at the console output from the instance. The console output might not get updated consistently. If the console output is still empty a few minutes after launching the instance and attaching the EBS volume, try rebooting the instance, which will force the console to refresh.

- If you need to attach the bootable EBS volume to another instance (not the boot AMI), attach it to /dev/sdp and mount it as follows:

      mkdir /ebs && mount /dev/sdp /ebs

- The boot AMI includes a hook that allows you to add a program to be executed before it performs a pivot_root to the EBS drive. This avoids the need to re-bundle the boot AMI if you need to change the startup process. The hook looks for a file called /pre-pivot.sh on the EBS drive and executes the file if it can.

- Booting from an EBS drive seems to take slightly longer than booting a regular EC2 instance. Don’t be surprised if it takes up to five minutes until you can get an SSH connection to the instance.

- You don’t need to re-attach the EBS volume when you reboot the instance – it stays attached.
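The launch steps and the console tips above map onto a handful of EC2 API tools commands. This sketch prints them in order; the helper name, AMI ID, keypair, volume ID, zone, and instance type are hypothetical placeholders, and INSTANCE_ID stands for the ID reported by ec2-run-instances.

```shell
# Print (do not run) the commands for launching the boot AMI and attaching
# the bootable EBS volume. launch_plan is a hypothetical helper name.
launch_plan() {   # args: AMI ID, keypair, zone, volume ID, optional device
    ami=$1; keypair=$2; zone=$3; volume=$4; device=${5:-/dev/sdp}
    # The instance must run in the same availability zone as the volume.
    echo "ec2-run-instances $ami -k $keypair -t m1.small -z $zone"
    echo "ec2-attach-volume $volume -i INSTANCE_ID -d $device"
    # Troubleshooting: read the console, reboot if it stays empty.
    echo "ec2-get-console-output INSTANCE_ID"
    echo "ec2-reboot-instances INSTANCE_ID"
}

launch_plan ami-12345678 my-keypair us-east-1a vol-12345678
```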

Usage Tips

- Create a file in the root of each bootable EBS volume labeling the purpose of the volume, such as /bootable-32bit-appserver. This helps when you mount the EBS drive to an instance as a “regular” volume: the label indicates what the purpose of the volume is, and helps you distinguish it from other attached EBS drives. This is good practice even for non-bootable EBS volumes. See below for a tip on how to track the purpose of EBS volumes without looking at their contents.

- Once you get the boot AMI running properly with the bootable EBS volume, shut it down and take a snapshot of the volume before you make any other changes to it. This snapshot is your “master bootable volume” snapshot, containing only the minimum setup necessary to boot.

- After creating a “master bootable volume” snapshot you can (and should!) launch the boot AMI again (remembering to attach the bootable EBS volume) and customize the instance any way you want: add your application stack, your database, your tools, anything else. These changes will persist on the bootable EBS volume even after the instance has terminated. This is the main motivation for the bootable EBS volume solution!

- You can create multiple bootable EBS volumes from the “master bootable volume” snapshot and customize them each. See below for a tip on how to keep track of the purpose of each EBS volume.

- The setup instructions above can be used for creating either a 32-bit or a 64-bit boot AMI and matching bootable EBS volume. Because you can’t run an AMI for one architecture on an EC2 instance of the other architecture, you’ll need to create two separate boot AMIs and two separate bootable EBS volumes if you plan to run on both 32-bit and 64-bit EC2 instances. And, because the bootable EBS volumes created by this procedure will contain a 32-bit or 64-bit specific linux stack, be sure to attach the corresponding bootable EBS volume for the boot AMI you launch.

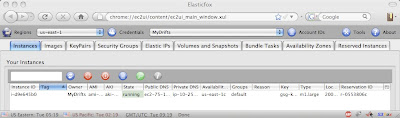

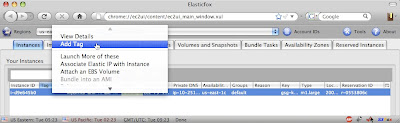

- If you work with multiple EBS volumes you will want to identify the purpose of each volume without attaching it to a running instance and looking at the label file you created. Unfortunately the EC2 API does not currently offer a way to tag EBS volumes. But the ElasticFox Firefox extension does – and I highly recommend it for this purpose. Note that the volume tags will only be visible in the browser that creates them, not on other machines. [See my article on Copying ElasticFox tags between browsers for a workaround.]

Cost Implications of Booting Instances from EBS

EBS costs money beyond what you pay for the disk drives that come as part of EC2 instances. There are four components of the EBS cost to consider:

- Allocated storage size ($0.10 per GB per month)

- I/O requests ($0.10 per million I/O requests)

- Snapshot storage (same as S3 storage costs; depends on the region)

- Snapshot transfer (same as S3 transfer costs; depends on the region)

The AWS Simple Monthly Calculator can help you estimate these costs. Here are some guidelines to help you figure out what numbers to put into these fields:

- Component #1 is easy: just plug in the size of your bootable EBS volume.

- Component #2 should be estimated based on the I/O usage of your existing application instance. You can use iostat to estimate this number as follows:

      iostat | awk -F" " 'BEGIN {x="0.0"} /^sd/ {x=x+$3} END {print x}'

  The result is the number of I/O transactions per second. Multiply this figure by 2592000 (60 * 60 * 24 * 30, the number of seconds in a month) to get the number of I/O transactions per month, then divide by 1 million. Or, better yet, use this instead:

      iostat | awk -F" " 'BEGIN {x="0.0"} /^sd/ {x=x+$3} END {printf "%12.2f\n", x*2.592}'

  For my test environment, this figure comes to 29 (million I/O requests per month).

- Component #3 can be estimated with the help of the guidelines at the bottom of the EBS Product Info page:

Snapshot storage is based on the amount of space your data consumes in Amazon S3. Because data is compressed before being saved to Amazon S3, and Amazon EBS does not save empty blocks, it is likely that the size of a snapshot will be considerably less than the size of your volume. For the first snapshot of a volume, Amazon EBS will save a full copy of your data to Amazon S3. However for each incremental snapshot, only the part of your Amazon EBS volume that has been changed will be saved to Amazon S3.

As a conservative estimate of snapshot storage size, I use the same size as the actual data on the EBS volume. I figure this covers the initial compressed snapshot plus a month’s worth of delta snapshots.

- Component #4 can also be estimated from the guidelines further down the same page:

Volume data is broken up into chunks before being transferred to Amazon S3. While the size of the chunks could change through future optimizations, the number of PUTs required to save a particular snapshot to Amazon S3 can be estimated by dividing the size of the data that has changed since the last snapshot by 4MB. Conversely, when loading a snapshot from Amazon S3 into an Amazon EBS volume, the number of GET requests needed to fully load the volume can be estimated by dividing the full size of the snapshot by 4MB. You will also be charged for GETs and PUTs at normal Amazon S3 rates.

My own monthly estimate includes taking one full EBS volume snapshot and creating one EBS volume from a snapshot. I keep around 20GB of data on the EBS volume. So I divide 20480 (20 GB expressed in MB) by 4 to get 5120. This is the estimated number of PUTs per month. It is also the estimated number of GETs per month.

For my usage in our test environment, the cost of running instances that boot from a 50GB EBS volume with 20GB of data on it in the US region comes to approximately $10.96 per month.
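If you would rather script the estimate than use the calculator, here is a sketch that adds up the four components. The EBS rates are the ones listed above. The S3 rates ($0.15 per GB-month, $0.01 per 1,000 PUTs, $0.01 per 10,000 GETs) are my assumptions for the US region and do not appear in this article, so substitute the current prices for your region. The function name is hypothetical.

```shell
# Estimate the monthly EBS cost from the four components discussed above.
# ebs_monthly_cost is a hypothetical helper; the S3 rates are ASSUMED values.
ebs_monthly_cost() {   # args: volume GB, I/O (millions), snapshot GB, data GB
    awk -v vol="$1" -v io="$2" -v snap="$3" -v data="$4" 'BEGIN {
        ebs_gb = 0.10; io_million = 0.10          # EBS rates from the list above
        s3_gb = 0.15                              # assumed S3 storage rate
        put_per_1000 = 0.01; get_per_10000 = 0.01 # assumed S3 request rates
        chunks = data * 1024 / 4                  # 4MB chunks per full snapshot
        total = vol * ebs_gb + io * io_million + snap * s3_gb
        total += chunks / 1000 * put_per_1000     # one full snapshot (PUTs)
        total += chunks / 10000 * get_per_10000   # one volume restore (GETs)
        printf "%.2f\n", total
    }'
}

ebs_monthly_cost 50 29 20 20   # prints 10.96
```

With my numbers (50GB volume, 29 million I/O requests, 20GB of snapshot storage, 20GB of data) the request charges come to only a few cents, so the storage and I/O components dominate.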

Is it financially worthwhile?

As long as your EBS cost estimate is less than the cost of running the instance for the hours it would sit unused, this solution saves you money. My test instance is an m1.large in the US region, which costs $0.40 per hour. The break-even point is 10.96 / 0.40 = 27.4 hours, so if my instance would sit idle for at least 28 hours a month, I save money by using a bootable EBS volume and terminating the instance when it is unused. As it happens, my test instance is unused more than 360 hours a month, so for me it is a no-brainer: I use bootable EBS volumes.
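The break-even arithmetic is easy to repeat for your own numbers. A small sketch, with a function name of my own choosing, that rounds up to whole hours:

```shell
# Idle hours per month at which the EBS cost equals the instance cost.
# break_even_hours is a hypothetical helper name.
break_even_hours() {   # args: monthly EBS cost, hourly instance price
    awk -v cost="$1" -v rate="$2" 'BEGIN {
        h = cost / rate
        whole = int(h)
        if (whole < h) whole++    # round up to the next whole hour
        print whole
    }'
}

break_even_hours 10.96 0.40   # prints 28
```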

EC2 instances that boot from an EBS volume offer the benefits of an always-on machine without the costs of paying for it when it is not in use. The implementation presented here was developed based on posts by the aforementioned forum users, and I thank them for their help.