From March 7 to 10, 2011, I will be in Santa Clara for the CloudConnect conference. There are many reasons you should go too, and I’d like to highlight one session that you should not miss: the Platforms and Ecosystems BOF, on Tuesday, March 8, from 6:00 to 7:30 PM. Read on for a detailed description of this BOF session and why it promises to be worthwhile.

[Full disclosure: I’m the track chair for the Design Patterns track and I’m running the Platforms and Ecosystems BOF. The event organizers are sponsoring my hotel for the conference, and like all conference speakers my admission to the event is covered.]

CloudConnect 2011 promises to be a high-quality conference, as last year’s was. This year you will be able to learn all about design patterns for cloud applications in the Design Patterns track I’m leading. You’ll also be able to hear from an all-star lineup about many aspects of using the cloud: cloud economics; cloud security; culture, risks, and governance; data and storage; devops and automation; performance and monitoring; and private clouds.

But I’m most looking forward to the Platforms and Ecosystems BOF because the format of the event promises to foster great discussions.

The BOF Format

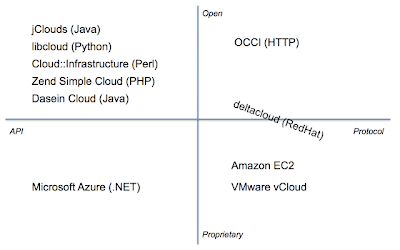

The BOF will be conducted as a… well, it’s hard to describe in words only, so here is a picture:

At three fixed points around the outside of the room will be three topics: Public IaaS, Public PaaS, and Private Cloud Stacks. There will also be three themes which rotate around the room at each interval; these themes are: Workload Portability, Monitoring & Control, and Avoiding Vendor Lock-in. At any one time there will be three discussions taking place, one in each corner, focusing on the particular combination of that theme and topic.

Here is an example of the first set of discussions:

And here is the second set of discussions, which will take place after one “turn” of the inner “wheel”:

And here is the final set of discussions, after the second turn of the inner wheel:

In all, nine discussions are conducted. Here is a single matrix to summarize:
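
If you think better in code than in pictures, the rotation can also be sketched in a few lines of Python. This is only an illustration: the topic and theme names come from the description above, but the starting alignment of themes to topics and the direction the wheel turns are my assumptions, and the function name `discussion_rounds` is made up for this sketch.

```python
from collections import deque

# Fixed topics around the outside of the room (names from the post).
TOPICS = ["Public IaaS", "Public PaaS", "Private Cloud Stacks"]

# Themes on the inner "wheel" (names from the post).
# Assumption: the starting alignment and turn direction are illustrative only.
THEMES = ["Workload Portability", "Monitoring & Control", "Avoiding Vendor Lock-in"]


def discussion_rounds(topics, themes):
    """Return one list of (topic, theme) pairs per round of discussions."""
    wheel = deque(themes)
    rounds = []
    for _ in range(len(themes)):
        # Pair each fixed topic with the theme currently facing it.
        rounds.append(list(zip(topics, wheel)))
        # One "turn" of the inner wheel.
        wheel.rotate(1)
    return rounds


rounds = discussion_rounds(TOPICS, THEMES)
for number, pairs in enumerate(rounds, start=1):
    print(f"Round {number}")
    for topic, theme in pairs:
        print(f"  {topic}: {theme}")
```

Three rounds of three simultaneous discussions give the nine topic and theme combinations, with each combination appearing exactly once.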

Anatomy of a Discussion

What makes a discussion worthwhile? Interesting questions, focus, and varied opinions. The discussions in the BOF will have all of these elements.

Interesting Questions

The questions above are just “seeder” questions. They are representative questions related to the intersection of each topic and theme, but they are by no means the only questions. Do you have questions you’d like to see discussed? Please, leave a comment below. Better yet, attend the BOF and raise them there, in the appropriate discussion.

Focus

Nothing sucks more than a pointless tangent or a single person monopolizing the floor. Each discussion will be shepherded by a capable moderator, who will keep things focused on the subject area and encourage everyone’s participation.

Varied Opinions

Topic experts and theme experts will participate in every discussion.

Vendors will be present as well – but the moderators will make sure they do not abuse the forum.

And interested parties – such as you! – will be there too. In unconference style, the audience will drive the discussion.

Together, these three elements are the ingredients that keep things interesting.

At the Center

What, you may ask, is at the center of the room? I’m glad you asked. There’ll be beer and refreshments at the center. This is also where you can conduct spin-off discussions if you like.

The Platforms and Ecosystems BOF at CloudConnect is the perfect place to bring your cloud insights, case studies, anecdotes, and questions. It’s a great forum to discuss the ideas you’ve picked up during the day at the conference, in a less formal atmosphere where deep discussion is on the agenda.

Here’s a discount code for 25% off registration: CNXFCC03. I hope to see you there.